Build AI products powered by GPT-3.5 Turbo or Qwen3-Flash - no coding required

GPT-3.5 Turbo vs Qwen3-Flash

Compare GPT-3.5 Turbo and Qwen3-Flash. Build AI products powered by either model on Appaca.

Model Comparison

| Feature | GPT-3.5 Turbo | Qwen3-Flash |
|---|---|---|
| Provider | OpenAI | Alibaba Cloud |
| Model type | Text | Text |
| Context window | 16,385 tokens | 1,000,000 tokens |
| Input cost (per 1M tokens) | $0.50 | $0.02 |
| Output cost (per 1M tokens) | $1.50 | $0.22 |
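The per-token prices in the comparison table turn into a workload cost with simple arithmetic: (tokens ÷ 1,000,000) × price, summed over input and output. The sketch below hard-codes the listed prices, which are an assumption that may go stale since providers change pricing over time. The helper name `estimate_cost` and the example workload are hypothetical.

```python
from decimal import Decimal

# USD per 1M tokens, copied from the comparison table.
# Assumption: these list prices are current; check each provider before relying on them.
PRICES = {
    "GPT-3.5 Turbo": {"input": Decimal("0.50"), "output": Decimal("1.50")},
    "Qwen3-Flash": {"input": Decimal("0.02"), "output": Decimal("0.22")},
}

ONE_MILLION = Decimal(1_000_000)


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Hypothetical helper: estimated USD cost of a workload on one model."""
    price = PRICES[model]
    input_cost = Decimal(input_tokens) / ONE_MILLION * price["input"]
    output_cost = Decimal(output_tokens) / ONE_MILLION * price["output"]
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical monthly workload: 10M input tokens, 2M output tokens.
    for name in PRICES:
        print(f"{name}: ${estimate_cost(name, 10_000_000, 2_000_000):.2f}")
```

For that example workload the sketch prints $8.00 for GPT-3.5 Turbo and $0.64 for Qwen3-Flash. `Decimal` is used instead of `float` so cent-level sums come out exact; real bills may also depend on provider-specific details this sketch ignores.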

Build AI products powered by any model

Appaca is a platform that enables you to create AI tools and agents. Choose the best model for your product and launch to customers.

Multi-Model Support

Power your AI product with GPT-3.5 Turbo, Qwen3-Flash, or any supported model. Switch anytime.

No Infrastructure Needed

We handle all API integrations. You focus on building your AI product, not managing keys.

Launch & Monetize

Build once, sell to customers. Appaca handles payments, hosting, and scaling.

No credit card required • Build your first AI product in minutes

Strengths & Best Use Cases

GPT-3.5 Turbo

OpenAI

1. Low-cost text model

- Inexpensive among OpenAI's legacy models, although Qwen3-Flash (see the table above) and GPT-4o mini both charge less per token.

- Suitable for very high-volume workloads with simple requirements.

2. Good for lightweight NLP tasks

- Classification, summarization, rewriting, paraphrasing, intent detection.

- Works for simple logic tasks and short reasoning sequences.

3. Works well for basic chatbots

- Optimized for the Chat Completions API; GPT-3.5 models originally powered ChatGPT.

- Good for rule-based or templated conversation flows.

4. Stable and predictable outputs

- Legacy behavior makes it suitable for systems built years ago that rely on its quirks.

- Good for backward compatibility or long-term enterprise pipelines.

5. Supports fine-tuning

- Useful for teams maintaining older fine-tuned GPT-3.5 models.

- Lets teams build domain-specific behavior from existing datasets into the model.

6. Limited capabilities compared to newer models

- No vision or audio support: it accepts and returns text only, although it does support streaming and function calling.

- Much weaker reasoning and accuracy than GPT-4o mini or GPT-5.1.

7. Small context window (16K)

- Limited for multi-document tasks or long conversations.

- Best used for short, simple prompts or structured tasks.

8. Recommended migration path

- OpenAI explicitly recommends using GPT-4o mini instead.

- GPT-4o mini is cheaper, more capable, multimodal, and just as fast.
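Because GPT-3.5 Turbo is served through the Chat Completions API, moving a basic chat integration to GPT-4o mini can be as small as changing the `model` field of the request body, though prompts should still be retested. The sketch below only builds the JSON body as a dictionary and sends nothing over the network. The model IDs `gpt-3.5-turbo` and `gpt-4o-mini` are OpenAI's published names; the helpers `build_chat_request` and `migrate` and the prompt text are hypothetical.

```python
import json

LEGACY_MODEL = "gpt-3.5-turbo"
RECOMMENDED_MODEL = "gpt-4o-mini"


def build_chat_request(model: str, system: str, user: str, max_tokens: int = 256) -> dict:
    """Hypothetical helper: body for POST /v1/chat/completions (not sent here)."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
        "max_tokens": max_tokens,
        "temperature": 0,  # lower randomness suits templated conversation flows
    }


def migrate(request: dict) -> dict:
    """Return a shallow copy of the request that targets GPT-4o mini instead."""
    migrated = dict(request)
    migrated["model"] = RECOMMENDED_MODEL
    return migrated


if __name__ == "__main__":
    req = build_chat_request(LEGACY_MODEL, "You classify support tickets.", "My invoice is wrong.")
    print(json.dumps(migrate(req), indent=2))
```

Keeping the model ID in one place makes the switch a configuration change rather than a code change. Fine-tuned GPT-3.5 models are a different case, since their custom model IDs have no drop-in replacement.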

Qwen3-Flash

Alibaba Cloud

1. Enhanced performance for a Flash-tier model

- Better factual accuracy and reasoning than earlier lightweight Qwen models.

2. Very inexpensive

- At $0.02 per 1M input tokens and $0.22 per 1M output tokens, well suited to high-volume automation and micro-agents.

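3. Hybrid thinking mode

- Can switch between a thinking mode (step-by-step reasoning before answering) and a non-thinking mode (fast, direct answers), which is uncommon in small, low-cost models.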

4. Large context capacity

- Up to 1M tokens.
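Both model profiles above turn on context size: 16,385 tokens for GPT-3.5 Turbo versus up to 1,000,000 for Qwen3-Flash. A quick pre-flight check shows which model can accept a given request. This is a minimal sketch assuming input and output tokens share one window; token counts are supplied by the caller (a real system would count them with each provider's tokenizer), and the helper names are hypothetical.

```python
# Context windows in tokens, copied from the comparison table.
CONTEXT_WINDOWS = {
    "GPT-3.5 Turbo": 16_385,
    "Qwen3-Flash": 1_000_000,
}


def fits_in_context(model: str, prompt_tokens: int, max_output_tokens: int) -> bool:
    """True if the prompt plus reserved output tokens fit the model's window.

    Assumption: input and output share a single context window.
    """
    return prompt_tokens + max_output_tokens <= CONTEXT_WINDOWS[model]


def models_that_fit(prompt_tokens: int, max_output_tokens: int) -> list[str]:
    """List every model whose window can hold the request."""
    return [m for m in CONTEXT_WINDOWS if fits_in_context(m, prompt_tokens, max_output_tokens)]


if __name__ == "__main__":
    # Hypothetical request: a 25,000-token document plus 1,000 tokens of output.
    print(models_that_fit(25_000, 1_000))  # ['Qwen3-Flash']
```

A request of that size already overflows GPT-3.5 Turbo's window, which is why multi-document tasks point toward the larger-context model.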

Prompts to Get Started

Use these prompts to power AI products you build on Appaca. Each works great with the models above.

Best for GPT-3.5 Turbo

Marketing-to-Sales Enablement Training (USP Talk Track)

Create a training program for the sales team to communicate your USP and address persona challenges with consistent messaging and proof.

Exit Ticket Creator

Generate quick formative assessments that gauge student understanding and inform next-day instruction.

Confirm Proper Citation Format (Bluebook/OSCOLA/etc.)

Review a legal document for citation format issues and propose precise corrections without changing substantive meaning.

Best for Qwen3-Flash

Marketing Attribution Model (Measure What Works)

Design an attribution model to measure how USP-focused campaigns influence persona engagement, conversion, and retention.

E-commerce Product Description

Create persuasive, SEO-friendly product descriptions that convert visitors into buyers.

Twitter/X Thread Generator

Create viral Twitter threads that educate, entertain, and grow your following with compelling hooks and strategic formatting.

What AI product will you build?

Describe your AI product idea and Appaca will help you create it - powered by GPT-3.5 Turbo, Qwen3-Flash, or any model you choose.

Free to start • No coding required • Launch to customers

See how Appaca works

Turn your AI ideas into AI products with the right AI model

Appaca is the complete platform for building AI agents, automations, and customer-facing interfaces. No coding required.

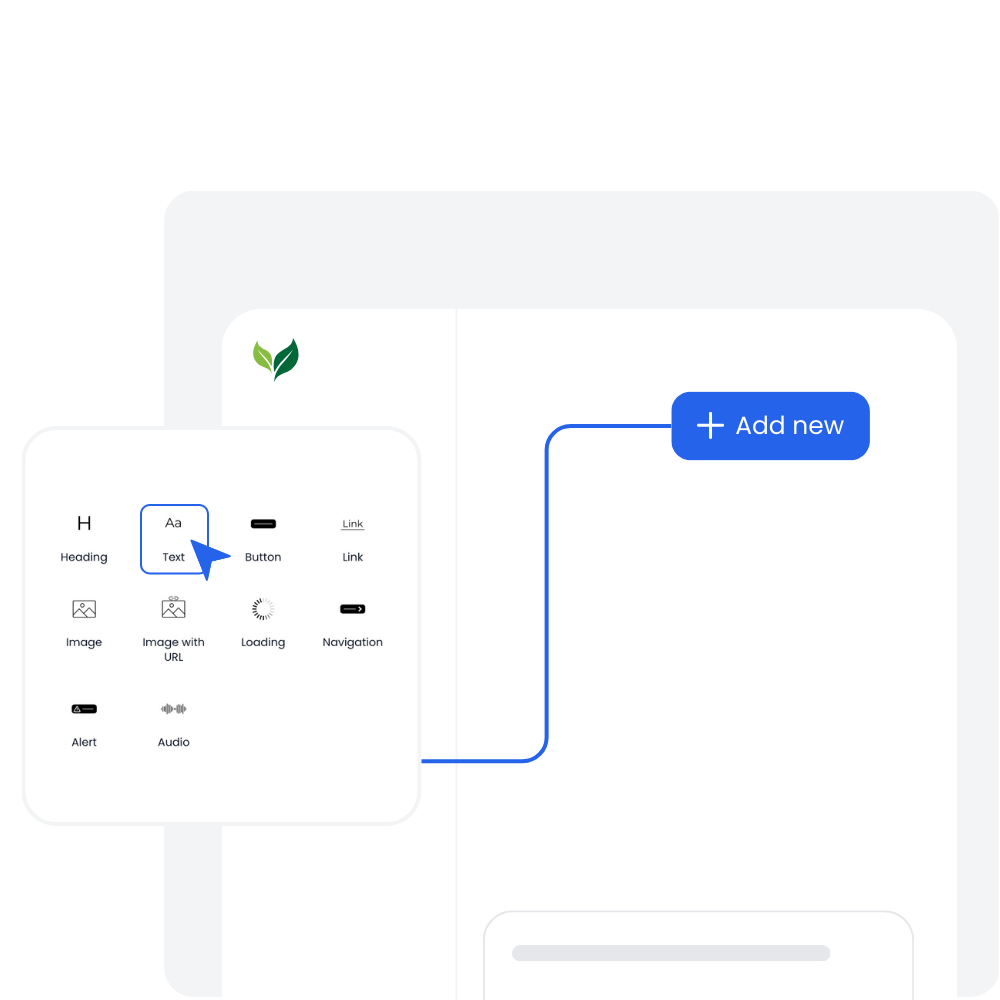

Customer-facing Interface

Create and style user interfaces for your AI agents and tools easily according to your brand.

Multimodal LLMs

Create, manage, and deploy custom AI models for text, image, and audio - trained on your own knowledge base.

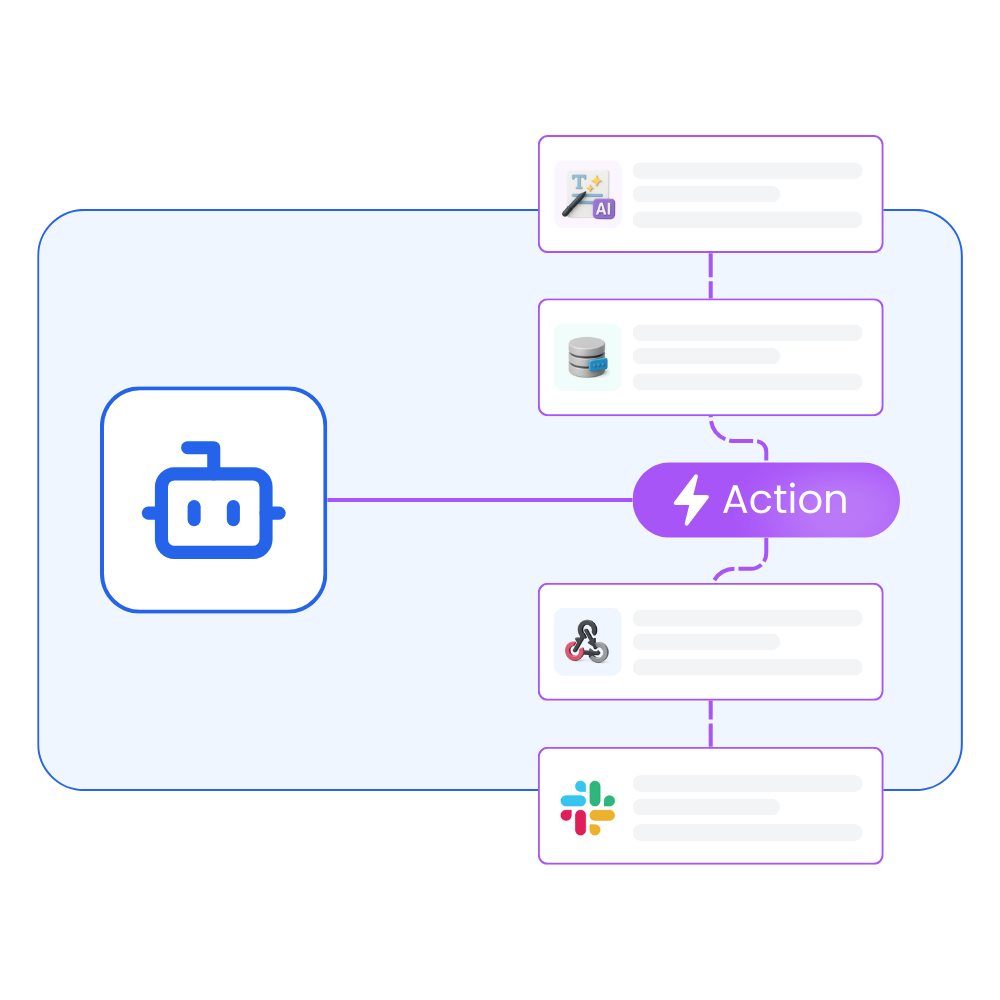

Agentic workflows and integrations

Create a workflow for your AI agents and tools to perform tasks and integrations with third-party services.


All you need to launch and sell your AI products with the right AI model

Appaca provides out-of-the-box solutions your AI apps need.

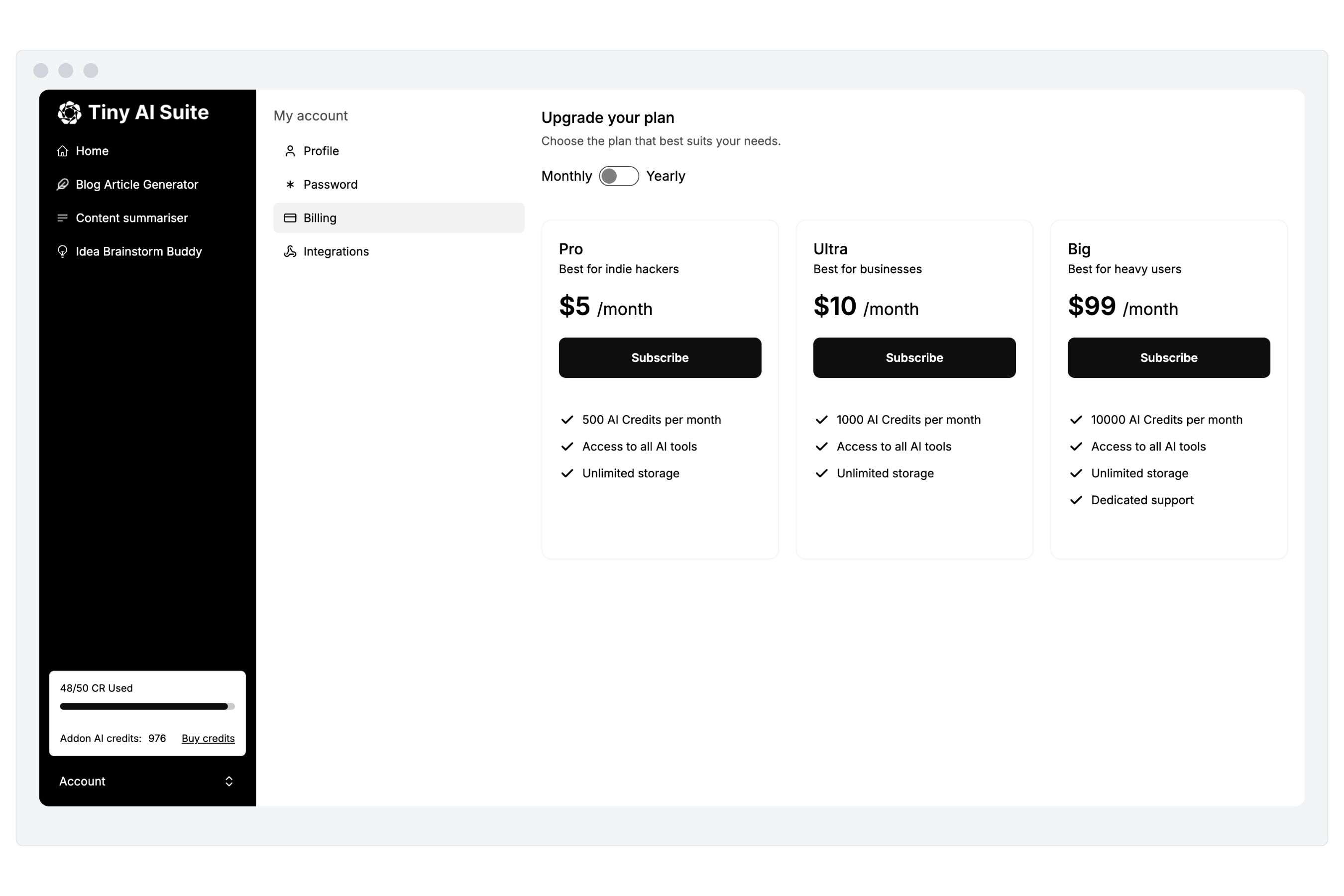

Monetize your AI

Sell your AI agents and tools as complete products with subscription and AI-credit billing, and generate revenue for your business.

“I've built with various AI tools and have found Appaca to be the most efficient and user-friendly solution.”

Cheyanne Carter

Founder & CEO, Edubuddy

