Build AI products powered by Grok 4 or Qwen3-Flash - no coding required

Grok 4 vs Qwen3-Flash

Compare Grok 4 and Qwen3-Flash. Build AI products powered by either model on Appaca.

Model Comparison

| Feature | Grok 4 | Qwen3-Flash |
|---|---|---|
| Provider | xAI | Alibaba Cloud |
| Model Type | text | text |
| Context Window | 256,000 tokens | 1,000,000 tokens |
| Input Cost | $3.00 / 1M tokens | $0.02 / 1M tokens |
| Output Cost | $15.00 / 1M tokens | $0.22 / 1M tokens |

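The per-request cost follows directly from the prices in the table: multiply each token count by its per-million price. The sketch below is a minimal Python example that assumes the flat list prices shown, with no caching, batching, or tiered discounts. The workload size (10,000 input tokens and 1,000 output tokens) is hypothetical.

```python
# Prices in USD per 1M tokens, copied from the comparison table on this page.
PRICES = {
    "Grok 4": {"input": 3.00, "output": 15.00},
    "Qwen3-Flash": {"input": 0.02, "output": 0.22},
}

def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request at flat list prices."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000

if __name__ == "__main__":
    # Hypothetical workload: 10,000 input tokens and 1,000 output tokens per request.
    for name in PRICES:
        print(f"{name}: ${request_cost(name, 10_000, 1_000):.5f}")
```

At these list prices the example request costs about $0.045 on Grok 4 and about $0.00042 on Qwen3-Flash. That is more than 100 times cheaper, and the gap matters most for high-volume automation.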

Build AI products powered by any model

Appaca is a platform that enables you to create AI tools and agents. Choose the best model for your product and launch to customers.

Multi-Model Support

Power your AI product with Grok 4, Qwen3-Flash, or any supported model. Switch anytime.

No Infrastructure Needed

We handle all API integrations. You focus on building your AI product, not managing keys.

Launch & Monetize

Build once, sell to customers. Appaca handles payments, hosting, and scaling.

No credit card required • Build your first AI product in minutes

Strengths & Best Use Cases

Grok 4

xAI

1. Flagship-level reasoning and math performance

- Designed for world-class reasoning depth, precision, and multi-step logical chains.

- Excels at STEM, mathematics, symbolic operations, proofs, and analytical workloads.

2. Powerful multimodal understanding

- Supports text, images, and other modalities.

- Handles cross-modal reasoning tasks requiring context synthesis.

3. Extreme capability across diverse tasks

- Positioned as a top-tier 'jack of all trades' model.

- Strong in natural language, coding, knowledge retrieval, and structured generation.

4. Large 256K context window

- Enables analysis of long documents, entire codebases, multi-document packs, and extensive agent sessions.

- Supports workloads that require persistent reasoning across large inputs.

5. Advanced developer tooling support

- Function calling for tool-augmented workflows.

- Structured outputs for predictable, schema-controlled generation.

- Integrates smoothly with agents and complex automation pipelines.
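With function calling, the model returns a tool name plus JSON-encoded arguments, and your own code runs the tool and sends the result back. The sketch below shows only the application side of that loop. It assumes the OpenAI-compatible `tools` / `tool_calls` message shape. The `get_order_status` tool and its registry are hypothetical, and no request is actually sent to xAI.

```python
import json

def get_order_status(order_id: str) -> dict:
    """Hypothetical tool: a real version would query your own backend."""
    return {"order_id": order_id, "status": "shipped"}

# Tool definition in the OpenAI-compatible "tools" format (assumed here).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_order_status",
            "description": "Look up the shipping status of an order.",
            "parameters": {
                "type": "object",
                "properties": {"order_id": {"type": "string"}},
                "required": ["order_id"],
            },
        },
    }
]

# Maps tool names the model may emit to local Python functions.
REGISTRY = {"get_order_status": get_order_status}

def run_tool_call(tool_call: dict) -> dict:
    """Execute one tool call and build the tool-result message to send back."""
    fn = REGISTRY[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])  # arguments arrive as a JSON string
    result = fn(**args)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }

if __name__ == "__main__":
    # Simulated tool call, shaped like one a model response might contain.
    call = {
        "id": "call_1",
        "type": "function",
        "function": {"name": "get_order_status", "arguments": '{"order_id": "A123"}'},
    }
    print(run_tool_call(call))
```

The registry lookup is the design choice that matters here. Only functions you have explicitly registered can run, so the model can request a tool but cannot execute arbitrary code.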

6. Efficient caching for cost reduction

- Cached input tokens discounted to $0.75 / 1M tokens.

- Encourages RAG, retrieval pipelines, and multi-step conversational workflows.
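The discount applies only to the part of the prompt served from cache, so the savings depend on how much of each request repeats earlier input, such as a shared system prompt or retrieved context. The minimal sketch below assumes the $3.00, $0.75, and $15.00 per-1M prices quoted on this page. The 50,000-token prompt with a 40,000-token reused portion is a hypothetical RAG turn.

```python
# Grok 4 prices in USD per 1M tokens, as quoted on this page.
INPUT_PRICE = 3.00
CACHED_INPUT_PRICE = 0.75
OUTPUT_PRICE = 15.00

def grok4_cost(input_tokens: int, cached_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost when `cached_tokens` of the input are billed at the cached rate."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    return (
        uncached * INPUT_PRICE
        + cached_tokens * CACHED_INPUT_PRICE
        + output_tokens * OUTPUT_PRICE
    ) / 1_000_000

if __name__ == "__main__":
    # Hypothetical RAG turn: 50,000-token prompt, 40,000 tokens of it reused from cache.
    cold = grok4_cost(50_000, 0, 1_000)
    warm = grok4_cost(50_000, 40_000, 1_000)
    print(f"no cache: ${cold:.4f}, with cache: ${warm:.4f}")
```

In this example the request drops from about $0.165 to about $0.075. The output cost is unchanged, so caching helps most when prompts are long and answers are short.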

7. Production-ready performance

- Stable rate limits: 480 requests per minute.

- High token throughput: 2,000,000 tokens per minute.

- Available across multiple xAI regional clusters.
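Throttling on the client side to stay under both limits avoids wasting calls that would be rejected. The sketch below is a minimal sliding-window limiter in Python. The class name is hypothetical, and the defaults mirror the 480 requests and 2,000,000 tokens per minute quoted above. Actual limits depend on your xAI account, and the per-request token count is an estimate you supply (prompt plus expected output).

```python
import time
from collections import deque

class MinuteRateLimiter:
    """Client-side sliding-window limiter for requests and tokens per minute.

    Defaults mirror the limits quoted on this page; treat them as assumptions.
    """

    def __init__(self, max_requests=480, max_tokens=2_000_000, clock=time.monotonic):
        self.max_requests = max_requests
        self.max_tokens = max_tokens
        self.clock = clock
        self.events = deque()  # (timestamp, tokens) for requests in the last 60 s
        self.tokens_in_window = 0

    def _evict(self, now):
        # Drop requests that are 60 seconds old or older from the window.
        while self.events and now - self.events[0][0] >= 60:
            _, tokens = self.events.popleft()
            self.tokens_in_window -= tokens

    def try_acquire(self, tokens: int) -> bool:
        """Record the request and return True if it fits within both limits."""
        now = self.clock()
        self._evict(now)
        if len(self.events) >= self.max_requests:
            return False
        if self.tokens_in_window + tokens > self.max_tokens:
            return False
        self.events.append((now, tokens))
        self.tokens_in_window += tokens
        return True
```

A caller that gets `False` would wait briefly and retry. Tracking tokens as well as requests matters because 2,000,000 / 480 is about 4,170 tokens. Once requests average more than that, the token limit binds before the request limit.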

8. Optional Live Search augmentation

- Add-on: $25 per 1K sources.

- Enhances factual accuracy and real-time information retrieval.
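The add-on is billed per source rather than per token, so its cost scales with the number of sources a query pulls in, on top of the token costs in the table. The worked example below assumes a hypothetical query that uses 20 sources:

```latex
\text{cost per source} = \frac{\$25}{1{,}000} = \$0.025,
\qquad
20 \text{ sources} \times \$0.025 = \$0.50
```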

Qwen3-Flash

Alibaba Cloud

1. Enhanced Flash-generation performance

- Improved factual accuracy and reasoning over the previous generation of Flash-tier models.

2. Very inexpensive

- Perfect for high-volume automation and micro-agents.

3. Hybrid thinking mode

- Can switch between a step-by-step thinking mode and a faster non-thinking mode, which is not typical for small, low-cost models.
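Hybrid thinking means the same model can be called with step-by-step reasoning switched on or off for each request. This typically trades extra latency and output tokens for depth. The minimal sketch below only builds the request body; nothing is sent over the network. It assumes Alibaba Cloud Model Studio's OpenAI-compatible chat endpoint, the model ID `qwen-flash`, and an `enable_thinking` request field. `choose_mode` is a hypothetical routing rule.

```python
import json

# Assumed model ID for Qwen3-Flash in Alibaba Cloud Model Studio; verify against current docs.
DEFAULT_MODEL = "qwen-flash"

# Hypothetical set of task types that benefit from step-by-step reasoning.
REASONING_TASKS = {"math", "planning", "code_review"}

def choose_mode(task: str) -> bool:
    """Hypothetical routing rule: enable thinking only for multi-step tasks."""
    return task in REASONING_TASKS

def build_chat_request(prompt: str, think: bool, model: str = DEFAULT_MODEL) -> dict:
    """Build an OpenAI-compatible chat request body with thinking toggled per call."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Assumed vendor-specific field that switches thinking mode on or off.
        "enable_thinking": think,
        # Streaming is assumed because some Qwen3 deployments only return
        # thinking output in streaming mode.
        "stream": True,
    }

if __name__ == "__main__":
    for task, prompt in [("math", "Is 221 prime?"), ("chitchat", "Say hi.")]:
        print(json.dumps(build_chat_request(prompt, choose_mode(task))))
```

With the official `openai` Python SDK, a non-standard field like `enable_thinking` would typically be passed through the `extra_body` argument rather than as a named parameter.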

4. Large context capacity

- Up to 1M tokens.
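Whether a long input fits depends on the prompt plus the output you reserve, since both typically count against the context window. The minimal check below uses the window sizes from the comparison table. It assumes token counts come from each provider's own tokenizer, because the two models do not split text identically.

```python
# Context windows in tokens, from the comparison table on this page.
CONTEXT_WINDOWS = {"Grok 4": 256_000, "Qwen3-Flash": 1_000_000}

def models_that_fit(prompt_tokens: int, max_output_tokens: int) -> list[str]:
    """Return the models whose context window holds the prompt plus the reserved output."""
    needed = prompt_tokens + max_output_tokens
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]

if __name__ == "__main__":
    # Hypothetical document packs of different sizes, each reserving 8,000 output tokens.
    for size in (200_000, 600_000):
        print(size, models_that_fit(size, 8_000))
```

For example, a 600,000-token document set with 8,000 tokens reserved for the answer fits only Qwen3-Flash's window.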

Prompts to Get Started

Use these prompts to power AI products you build on Appaca. Each works great with the models above.

Best for Grok 4

Quiz & Assessment Question Generator

Generate diverse quiz questions at various difficulty levels with answer keys and explanations.

Video Tutorials (Implementation Walkthroughs)

Create video tutorials that show your target persona how to apply your solution's unique selling proposition (USP) to specific challenges, with clear, actionable guidance.

Creative Short Story Generator

Generate unique short stories with compelling plots, diverse characters, and immersive settings.

Best for Qwen3-Flash

Build Emergency Fund

Calculate a personalized emergency fund target and get strategies for building that buffer without sacrificing essentials.

Lease Addendum Drafter (Permission + Restrictions)

Draft a professional lease addendum clause for special tenant requests (pets, installations, home business) with clear responsibilities and protections.

Meeting Notes Summarizer

Transform raw meeting transcripts or messy notes into clear, structured summaries with action items.

What AI product will you build?

Describe your AI product idea and Appaca will help you create it - powered by Grok 4, Qwen3-Flash, or any model you choose.

Free to start • No coding required • Launch to customers

See how Appaca works

Turn your AI ideas into AI products with the right AI model

Appaca is the complete platform for building AI agents, automations, and customer-facing interfaces. No coding required.

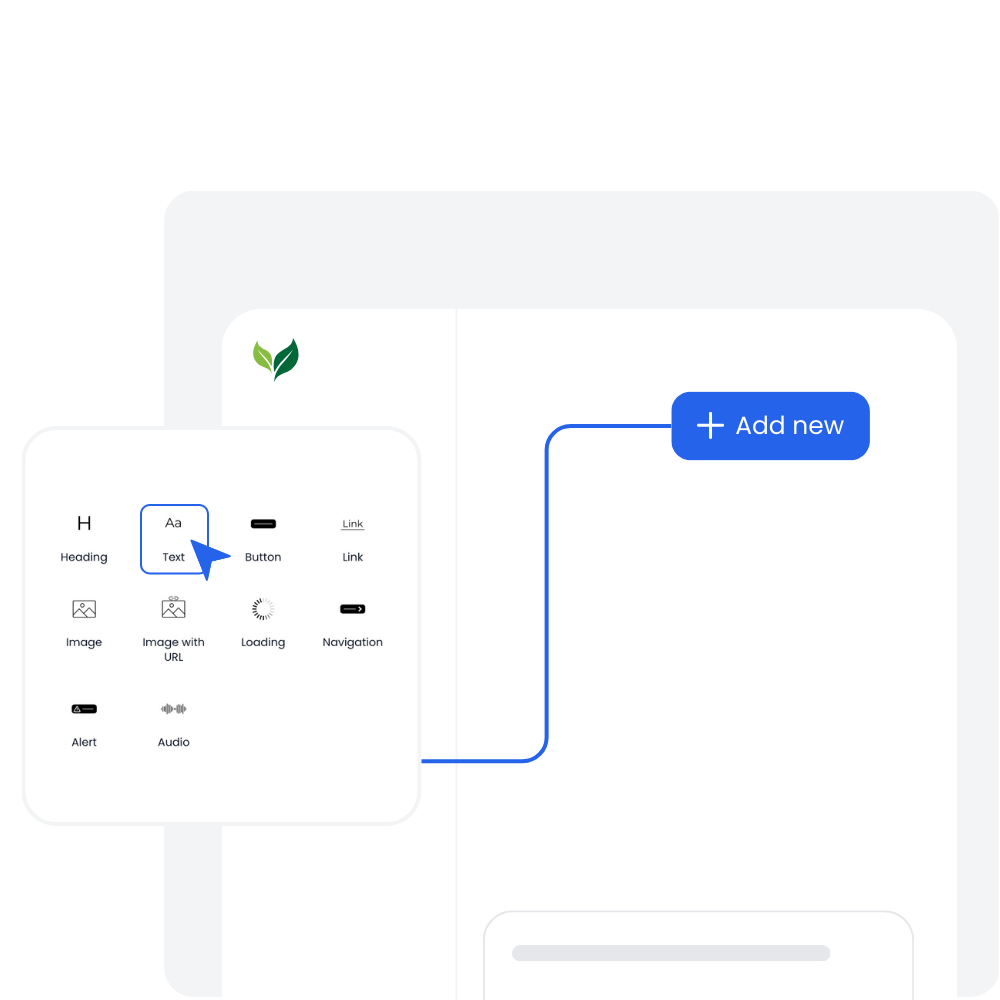

Customer-facing Interface

Create and style user interfaces for your AI agents and tools easily according to your brand.

Multimodal LLMs

Create, manage, and deploy custom AI models for text, image, and audio - trained on your own knowledge base.

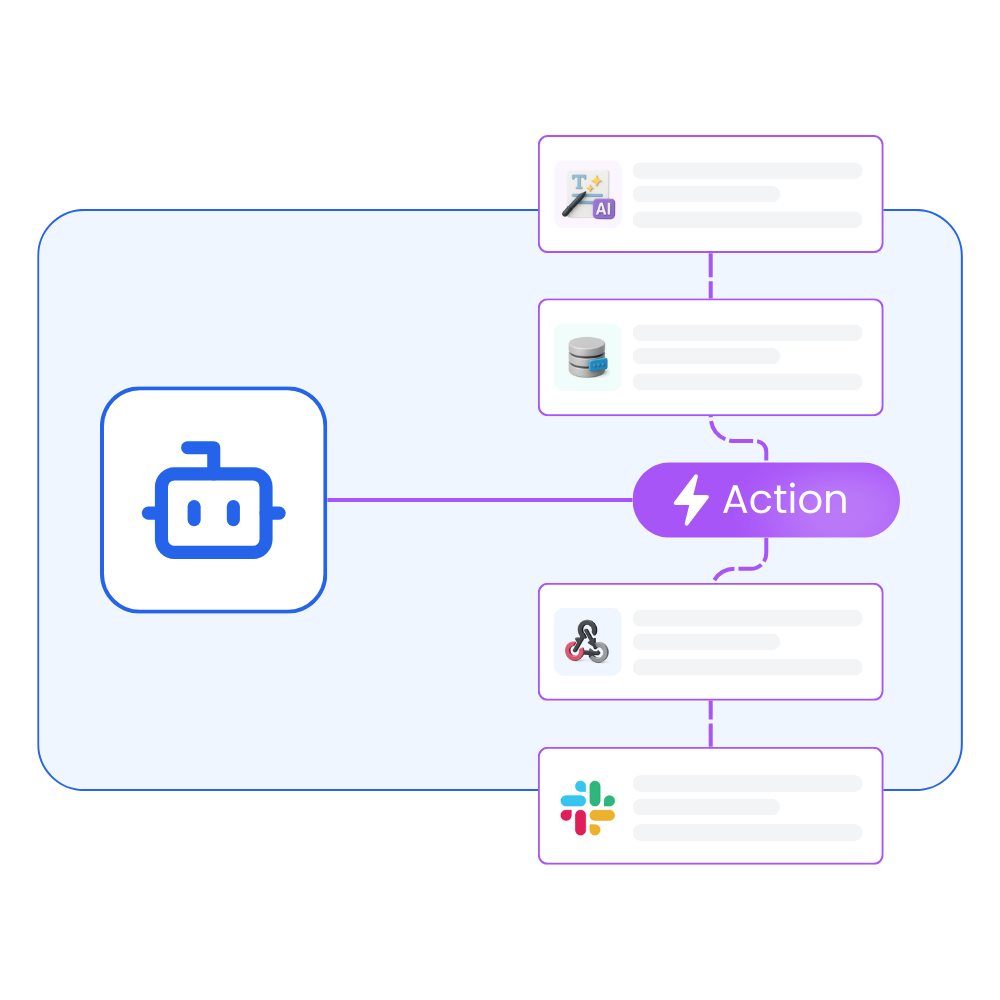

Agentic workflows and integrations

Create a workflow for your AI agents and tools to perform tasks and integrations with third-party services.


All you need to launch and sell your AI products with the right AI model

Appaca provides out-of-the-box solutions your AI apps need.

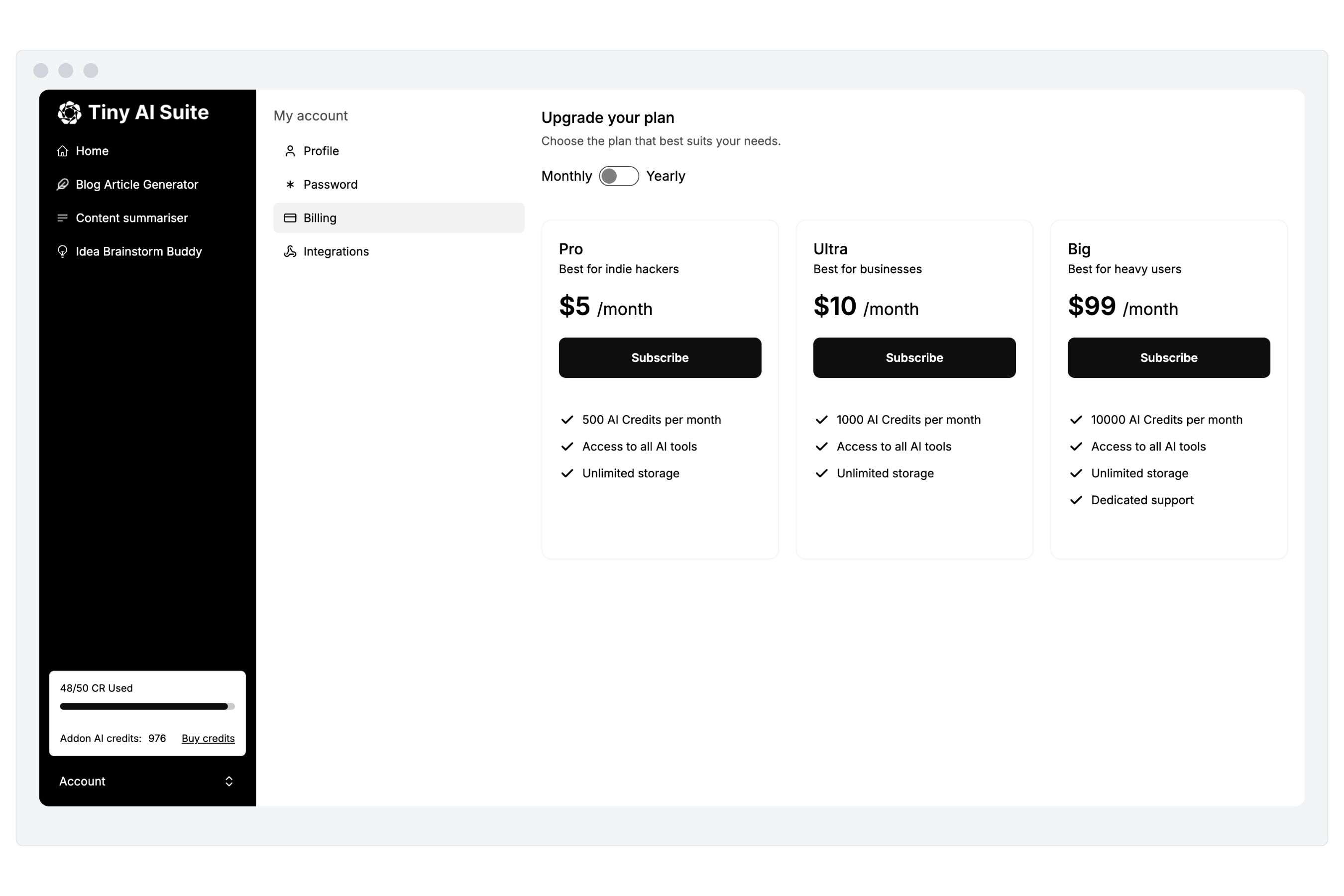

Monetize your AI

Sell your AI agents and tools as a complete product with subscription and AI-credit billing. Generate revenue for your business.

“I've built with various AI tools and have found Appaca to be the most efficient and user-friendly solution.”

Cheyanne Carter

Founder & CEO, Edubuddy

