Build AI products powered by GPT-4.1 Mini or Qwen-Flash - no coding required

GPT-4.1 Mini vs Qwen-Flash

Compare GPT-4.1 Mini and Qwen-Flash. Build AI products powered by either model on Appaca.

Model Comparison

| Feature | GPT-4.1 Mini | Qwen-Flash |
|---|---|---|
| Provider | OpenAI | Alibaba Cloud |
| Model Type | text | text |
| Context Window | 1,047,576 tokens | 1,000,000 tokens |
| Input Cost | $0.40 / 1M tokens | $0.02 / 1M tokens |
| Output Cost | $1.60 / 1M tokens | $0.22 / 1M tokens |
| Build with it | Build with GPT-4.1 Mini | Build with Qwen-Flash |
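To make the price gap concrete, here is a minimal Python sketch that estimates workload cost from the per-token prices listed in the table. The workload size (50M input tokens, 10M output tokens) and the helper name `estimate_cost` are assumptions made for illustration; actual billing may differ, for example with tiered or cached pricing.

```python
# Prices in USD per 1M tokens, copied from the comparison table.
PRICES_PER_MILLION = {
    "GPT-4.1 Mini": {"input": 0.40, "output": 1.60},
    "Qwen-Flash": {"input": 0.02, "output": 0.22},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a workload for one model."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical monthly workload: 50M input tokens and 10M output tokens.
for name in PRICES_PER_MILLION:
    print(f"{name}: ${estimate_cost(name, 50_000_000, 10_000_000):.2f}")
```

Under these assumed volumes, the estimate comes to about $36.00 for GPT-4.1 Mini and $3.20 for Qwen-Flash.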

Build AI products powered by any model

Appaca is a platform that enables you to create AI tools and agents. Choose the best model for your product and launch to customers.

Multi-Model Support

Power your AI product with GPT-4.1 Mini, Qwen-Flash, or any supported model. Switch anytime.

No Infrastructure Needed

We handle all API integrations. You focus on building your AI product, not managing keys.

Launch & Monetize

Build once, sell to customers. Appaca handles payments, hosting, and scaling.

No credit card required • Build your first AI product in minutes

Strengths & Best Use Cases

GPT-4.1 Mini

OpenAI

1. Fast, Lightweight, and Cost-Efficient

- Designed for speed with low latency, making it ideal for high-volume, real-time applications.

- More affordable than larger GPT-4.1 and GPT-5 models, enabling scalable deployments.

2. Strong Instruction Following

- Excels at following structured instructions and producing concise, deterministic outputs.

- Suitable for assistants, command-style interfaces, and tools that require stable, predictable behavior.

3. Reliable Tool Calling & Structured Outputs

- Built with strong support for:

  - Function calling

  - Structured outputs (JSON, typed objects)

  - Systematic workflows

- Ideal for automation, reasoning over parameters, and multi-step tool pipelines.
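As a rough illustration of what function calling involves on the application side, the Python sketch below defines one tool in the JSON-schema style used by the `tools` parameter of OpenAI's Chat Completions API, then validates and dispatches a tool call. The tool `get_order_status`, its handler, and the simulated call are all hypothetical, and no network request is made; on Appaca this plumbing is handled by the platform.

```python
import json

# Tool definition in the JSON-schema style of the Chat Completions `tools`
# parameter. The tool itself (get_order_status) is hypothetical.
TOOLS = {
    "get_order_status": {
        "type": "function",
        "function": {
            "name": "get_order_status",
            "description": "Look up the shipping status of an order.",
            "parameters": {
                "type": "object",
                "properties": {"order_id": {"type": "string"}},
                "required": ["order_id"],
                "additionalProperties": False,
            },
        },
    }
}


def get_order_status(order_id: str) -> dict:
    """Hypothetical handler; a real one would query an order system."""
    return {"order_id": order_id, "status": "shipped"}


HANDLERS = {"get_order_status": get_order_status}


def dispatch_tool_call(name: str, arguments_json: str) -> dict:
    """Validate a model-produced tool call and run the matching handler."""
    if name not in HANDLERS:
        raise ValueError(f"unknown tool: {name}")
    args = json.loads(arguments_json)  # arguments arrive as a JSON string
    schema = TOOLS[name]["function"]["parameters"]
    missing = [key for key in schema["required"] if key not in args]
    unexpected = [key for key in args if key not in schema["properties"]]
    if missing or unexpected:
        raise ValueError(f"bad arguments: missing={missing}, unexpected={unexpected}")
    return HANDLERS[name](**args)


# Simulated tool call, standing in for what a model might return.
print(dispatch_tool_call("get_order_status", '{"order_id": "A-1001"}'))
```

Checking arguments before dispatch keeps a malformed tool call from reaching business logic, which matters most in multi-step pipelines where one bad call can cascade.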

4. Multimodal Input (Text + Image)

- Accepts both text and image as input.

- Useful for tasks such as:

  - Image captioning

  - UI element reading

  - Visual question answering

5. Text-Only Output for Clarity

- Outputs text only, ensuring clean and consistent results for:

  - Data extraction

  - Summaries

  - Code comments

  - Chat responses

6. Massive 1M-Token Context Window

- Supports 1,047,576 tokens, enabling:

  - Long documents or books

  - Large codebases

  - Extensive conversation memory

- Great for long-context reasoning without requiring chunking.
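Whether a document actually fits without chunking can be checked up front. The Python sketch below uses the context window sizes from the comparison table and a rough heuristic of about 4 characters per token for English text. That ratio, the 4,000-token output reserve, and the function names are assumptions for illustration; a real tokenizer gives more accurate counts.

```python
# Context window sizes in tokens, taken from the comparison table.
CONTEXT_WINDOWS = {
    "GPT-4.1 Mini": 1_047_576,
    "Qwen-Flash": 1_000_000,
}

# Assumption: a rough average for English text, not a real tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, model: str, reserved_output_tokens: int = 4_000) -> bool:
    """Check whether the text plus a reserved output budget fits the window."""
    needed = estimate_tokens(text) + reserved_output_tokens
    return needed <= CONTEXT_WINDOWS[model]


# Hypothetical 4,000,000-character document (about 1,000,000 estimated tokens).
document = "word " * 800_000
for model in CONTEXT_WINDOWS:
    print(model, fits_in_context(document, model))
```

With these assumptions, the sample document fits GPT-4.1 Mini's window but slightly exceeds Qwen-Flash's once the output reserve is included, so it would need trimming or chunking there.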

7. Practical for Everyday AI Applications

- Sweet spot for:

  - Customer support agents

  - Content rewriting

  - Lightweight analysis

  - Classification and tagging

  - Workflow assistants

- Recommended primarily for simpler use cases, with GPT-5 Mini suggested for more complex tasks.

8. Broad API Support

- Available across:

  - Chat Completions

  - Responses

  - Realtime

  - Assistants

  - Other major API endpoints

- Compatible with long-context modes for large-scale retrieval and processing.

Qwen-Flash

Alibaba Cloud

1. Ultra-fast, ultra-cheap

- Designed for mass-scale workloads.

- Excellent for rewriting, extraction, classification.

2. Limited reasoning but great utility

- High throughput, low latency.

3. Optional thinking mode

- Adds chain-of-thought when needed.

4. Supports context cache & batch calls

- Enables very cost-effective system designs for repeated prompts and bulk jobs.

Prompts to Get Started

Use these prompts to power AI products you build on Appaca. Each works great with the models above.

Best for GPT-4.1 Mini

Listing Description Generator (Social + MLS)

Turn basic property details into compelling, SEO-friendly listing copy in two formats: short social teaser and a longer MLS/Zillow description.

Collaboration Outreach Request

Draft collaboration outreach messages for partnerships, co-marketing, podcasts, affiliates, and integrations, with clear value exchange and next steps.

Comprehensive Lesson Plan Creator

Design detailed, standards-aligned lesson plans with engaging activities, assessments, and differentiated instruction strategies.

Best for Qwen-Flash

Sales Objection Flipper: Reveal Hidden Pain Points

Convert common sales objections into underlying fears and create educational content ideas that overcome them before the sales call.

Legal Contract Summarizer

Summarize complex legal contracts into plain English to understand key terms, obligations, and risks.

Marketing Experimentation Framework (Test + Learn)

Create a marketing experimentation framework to test and optimize persona-targeted messaging and offers that highlight your USP and address challenges.

What AI product will you build?

Describe your AI product idea and Appaca will help you create it - powered by GPT-4.1 Mini, Qwen-Flash, or any model you choose.

Free to start • No coding required • Launch to customers

See how Appaca works

Turn your AI ideas into AI products with the right AI model

Appaca is the complete platform for building AI agents, automations, and customer-facing interfaces. No coding required.

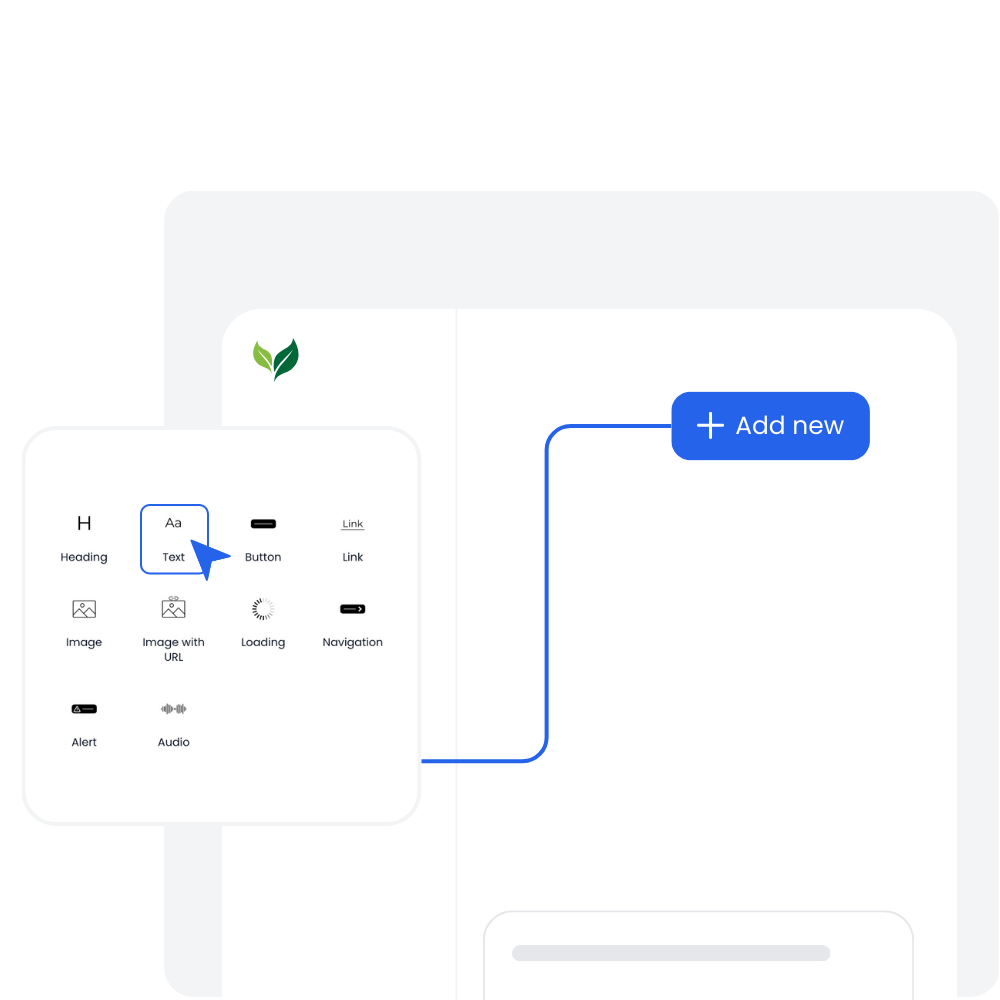

Customer-facing Interface

Create and style user interfaces for your AI agents and tools easily according to your brand.

Multimodal LLMs

Create, manage, and deploy custom AI models for text, image, and audio - trained on your own knowledge base.

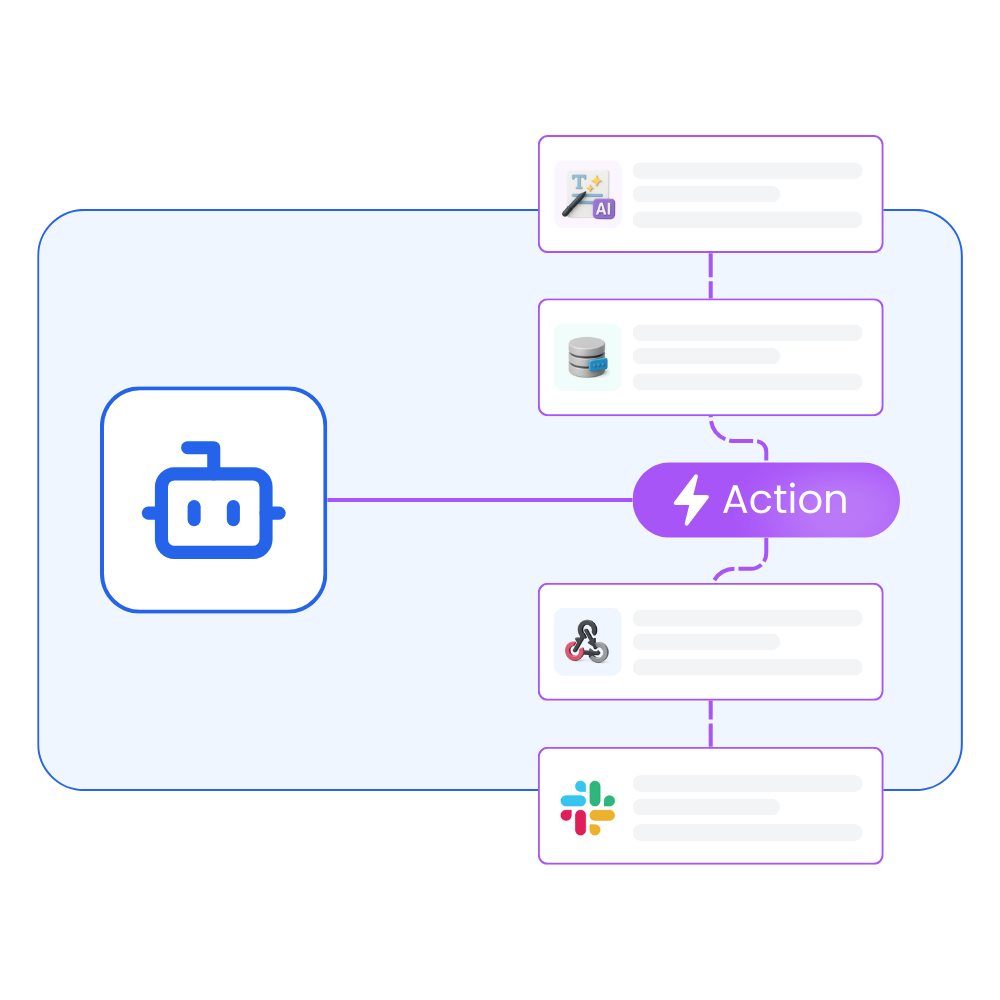

Agentic workflows and integrations

Create a workflow for your AI agents and tools to perform tasks and integrations with third-party services.

All you need to launch and sell your AI products with the right AI model

Appaca provides out-of-the-box solutions your AI apps need.

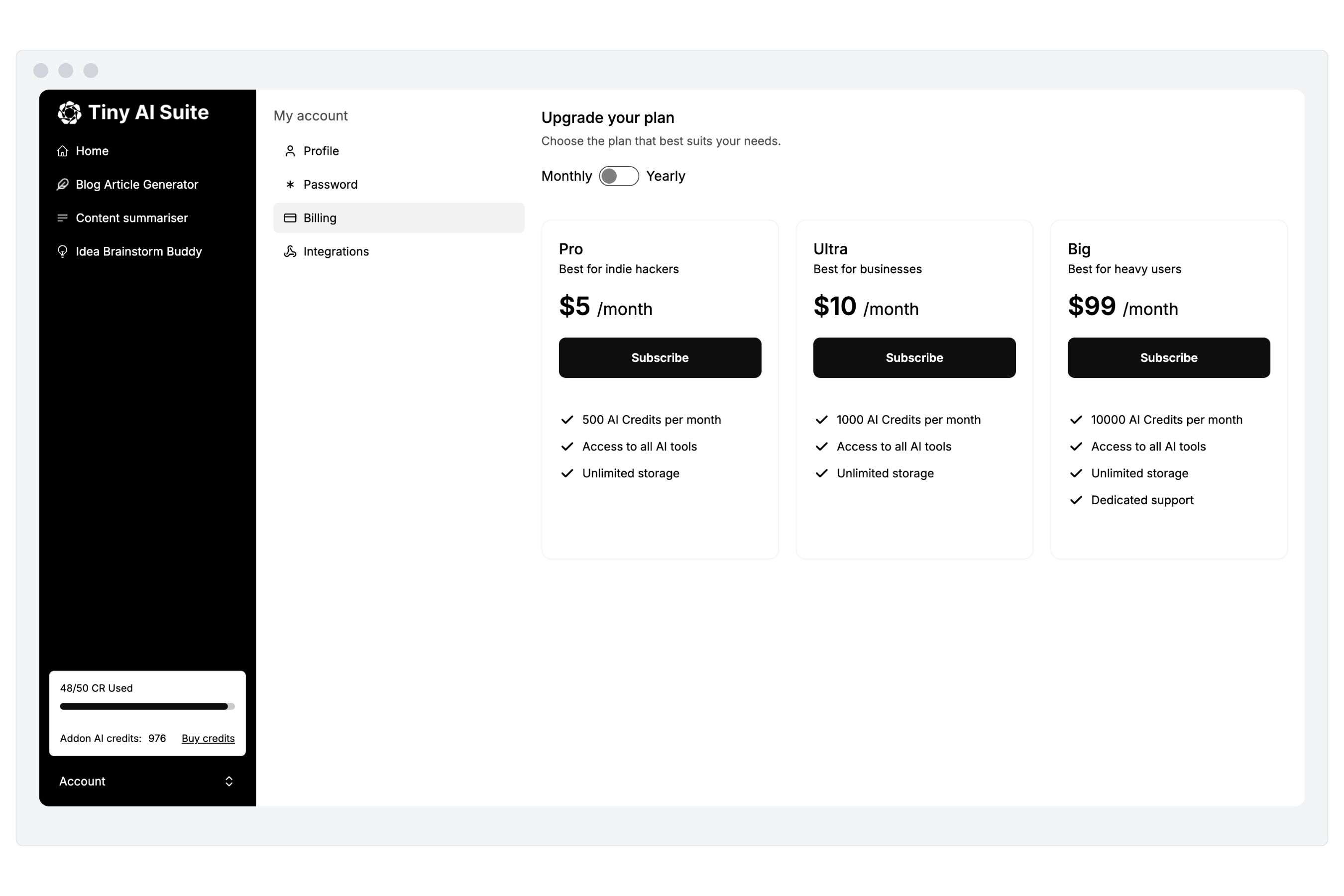

Monetize your AI

Sell your AI agents and tools as a complete product with subscription and AI credits billing. Generate revenue for your business.

“I've built with various AI tools and have found Appaca to be the most efficient and user-friendly solution.”

Cheyanne Carter

Founder & CEO, Edubuddy

Build AI products powered by GPT-4.1 Mini or Qwen-Flash

Create AI tools and agents on Appaca. Choose your model, build your product, launch to customers.