Build AI products powered by Claude 4.1 Opus or Grok 3 Mini - no coding required

Claude 4.1 Opus vs Grok 3 Mini

Compare Claude 4.1 Opus and Grok 3 Mini. Build AI products powered by either model on Appaca.

Model Comparison

| Feature | Claude 4.1 Opus | Grok 3 Mini |
|---|---|---|
| Provider | Anthropic | xAI |
| Model Type | text | text |
| Context Window | 1,000,000 tokens | 131,072 tokens |
| Input Cost | $15.00 / 1M tokens | $0.30 / 1M tokens |
| Output Cost | $75.00 / 1M tokens | $0.50 / 1M tokens |
| Build with it | Build with Claude 4.1 Opus | Build with Grok 3 Mini |
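To make the price gap concrete, here is a minimal sketch that turns the per-million-token prices from the table into a cost estimate. The function name `estimate_cost` and the monthly workload are hypothetical and chosen only for illustration; actual billing on Appaca or with either provider may differ.

```python
# Per-million-token list prices in USD, copied from the comparison table.
PRICES = {
    "Claude 4.1 Opus": {"input": 15.00, "output": 75.00},
    "Grok 3 Mini": {"input": 0.30, "output": 0.50},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a workload at list prices."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: 2M input tokens and 500K output tokens.
for name in PRICES:
    print(f"{name}: ${estimate_cost(name, 2_000_000, 500_000):.2f}")
```

For this assumed workload the estimate comes to $67.50 for Claude 4.1 Opus and $0.85 for Grok 3 Mini.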

Build AI products powered by any model

Appaca is a platform that enables you to create AI tools and agents. Choose the best model for your product and launch to customers.

Multi-Model Support

Power your AI product with Claude 4.1 Opus, Grok 3 Mini, or any supported model. Switch anytime.

No Infrastructure Needed

We handle all API integrations. You focus on building your AI product, not managing keys.

Launch & Monetize

Build once, sell to customers. Appaca handles payments, hosting, and scaling.

No credit card required • Build your first AI product in minutes

Strengths & Best Use Cases

Claude 4.1 Opus

Anthropic

1. Advanced Coding Performance

- Achieves 74.5% on SWE-bench Verified, advancing the Claude family's state-of-the-art coding performance.

- Stronger at:

  - Multi-file code refactoring

  - Large codebase debugging

  - Pinpointing exact corrections without unnecessary edits

- Outperforms Opus 4 and shows gains comparable to jumps seen in past major releases.

2. Improved Agentic & Research Capabilities

- Better at maintaining detail accuracy in long research tasks.

- Enhanced agentic search and step-by-step problem solving.

- Performs reliably across complex multi-turn reasoning tasks.

3. Validated by Real-World Users

- GitHub: Better multi-file refactoring and code adjustments.

- Rakuten Group: High precision debugging with minimal collateral changes.

- Windsurf: A one-standard-deviation improvement on its junior developer benchmark, similar in magnitude to the jump from Sonnet 3.7 to Sonnet 4.

4. Hybrid-Reasoning Benchmark Improvements

- Improvements across TAU-bench, GPQA Diamond, MMMLU, MMMU, AIME (with extended thinking).

- Stronger robustness in long-context reasoning tasks.

Grok 3 Mini

xAI

1. Lightweight but thoughtful reasoning

- Designed to 'think before responding' with accessible raw thought traces.

- Excellent for logic puzzles, lightweight reasoning, and systematic tasks.

2. Extremely cost-efficient

- Only $0.30 per 1M input tokens and $0.50 per 1M output tokens.

- Cached input tokens are billed at a reduced rate of $0.075 per 1M tokens.
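As a rough illustration of how caching changes the bill, the sketch below blends the two rates listed above. It assumes the $0.075 rate applies to cached input tokens only, and the `cached_fraction` parameter is a hypothetical way to describe how much of a prompt is served from cache.

```python
INPUT_PRICE = 0.30    # USD per 1M uncached input tokens (from the list above)
CACHED_PRICE = 0.075  # USD per 1M cached tokens (assumed to mean input tokens)


def input_cost(total_tokens: int, cached_fraction: float) -> float:
    """Estimate input cost in USD when part of the input is served from cache."""
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    cached = total_tokens * cached_fraction
    uncached = total_tokens - cached
    return (cached * CACHED_PRICE + uncached * INPUT_PRICE) / 1_000_000


# Hypothetical example: 10M input tokens, 60% of them cached.
print(f"${input_cost(10_000_000, 0.6):.2f}")
```

Under these assumptions, 10M input tokens with a 60% cache hit rate cost about $1.65 instead of $3.00.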

3. Fast and responsive

- Optimized for low-latency applications and high-throughput use cases.

- Suitable for chatbots, assistants, and automation flows.

4. Supports modern developer features

- Function calling for tool-augmented workflows.

- Structured outputs for schema-controlled responses.

- Integrates cleanly with agents and pipelines.

5. Large 131K context window

- Can understand and work with long documents, transcripts, or multi-turn sessions.

6. Great for non-domain-heavy tasks

- Useful for summarization, rewriting, extraction, everyday reasoning, and app logic.

- Works well on tasks that do not depend on deep domain knowledge.

7. Compatible with enterprise infrastructure

- Stable rate limits: 480 requests per minute.

- Same API structure as all Grok 3 models.
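A limit of 480 requests per minute averages out to one request every 0.125 seconds. The sketch below shows one way a client could pace itself to stay under that figure. It assumes the limit can be treated as an evenly spread budget, which the source does not confirm, and the `Pacer` class is hypothetical rather than part of any xAI or Appaca SDK.

```python
import time

REQUESTS_PER_MINUTE = 480                   # rate limit quoted above
MIN_INTERVAL = 60.0 / REQUESTS_PER_MINUTE   # 0.125 seconds between requests


class Pacer:
    """Spaces calls so they never exceed one per `min_interval` seconds."""

    def __init__(self, min_interval=MIN_INTERVAL,
                 clock=time.monotonic, sleep=time.sleep):
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = float("-inf")  # no request sent yet

    def wait(self) -> float:
        """Block until the next request is allowed; return the delay applied."""
        now = self._clock()
        delay = max(0.0, self._next_allowed - now)
        if delay > 0:
            self._sleep(delay)
        # Reserve the next slot relative to when this request actually goes out.
        self._next_allowed = max(now, self._next_allowed) + self._min_interval
        return delay
```

Calling `pacer.wait()` before each request keeps a single client under the quoted limit; several clients sharing one key would need a shared limiter instead.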

8. Optional Live Search support

- $25 per 1K sources for real-time search augmentation.

Prompts to Get Started

Use these prompts to power AI products you build on Appaca. Each works great with the models above.

Best for Claude 4.1 Opus

Forum Insider: Emotional Pain Points + Empathy Statements

Analyze forum threads and social comments to uncover urgent problems, voice-of-customer language, and empathy statements for marketing copy.

Financial Statement Analysis

Analyze financial statements to understand company health, trends, and investment potential.

Review Miner: Extract Recurring Pain Points

Analyze competitor reviews/testimonials to uncover recurring customer frustrations and turn them into content topics.

Best for Grok 3 Mini

Confirm Proper Citation Format (Bluebook/OSCOLA/etc.)

Review a legal document for citation format issues and propose precise corrections without changing substantive meaning.

Customer Advocacy Program (Activate Champions)

Create a customer advocacy program that turns satisfied customers into credible proof of your USP and a source of persona-aligned leads.

Content Hub (Central Resource Library)

Create a website content hub that centralizes resources related to persona challenges and positions your USP as the solution.

What AI product will you build?

Describe your AI product idea and Appaca will help you create it - powered by Claude 4.1 Opus, Grok 3 Mini, or any model you choose.

Free to start • No coding required • Launch to customers

See how Appaca works

Turn your AI ideas into AI products with the right AI model

Appaca is the complete platform for building AI agents, automations, and customer-facing interfaces. No coding required.

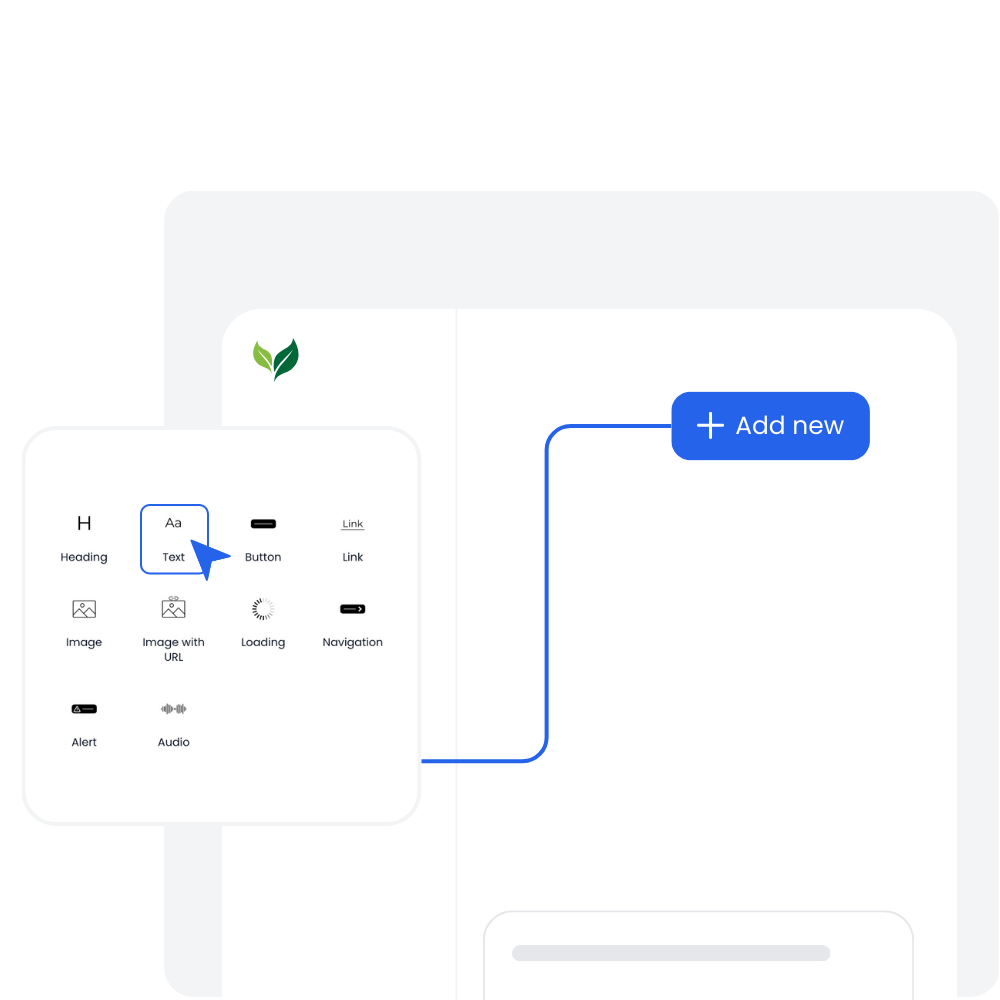

Customer-facing Interface

Create and style user interfaces for your AI agents and tools easily according to your brand.

Multimodal LLMs

Create, manage, and deploy custom AI models for text, image, and audio - trained on your own knowledge base.

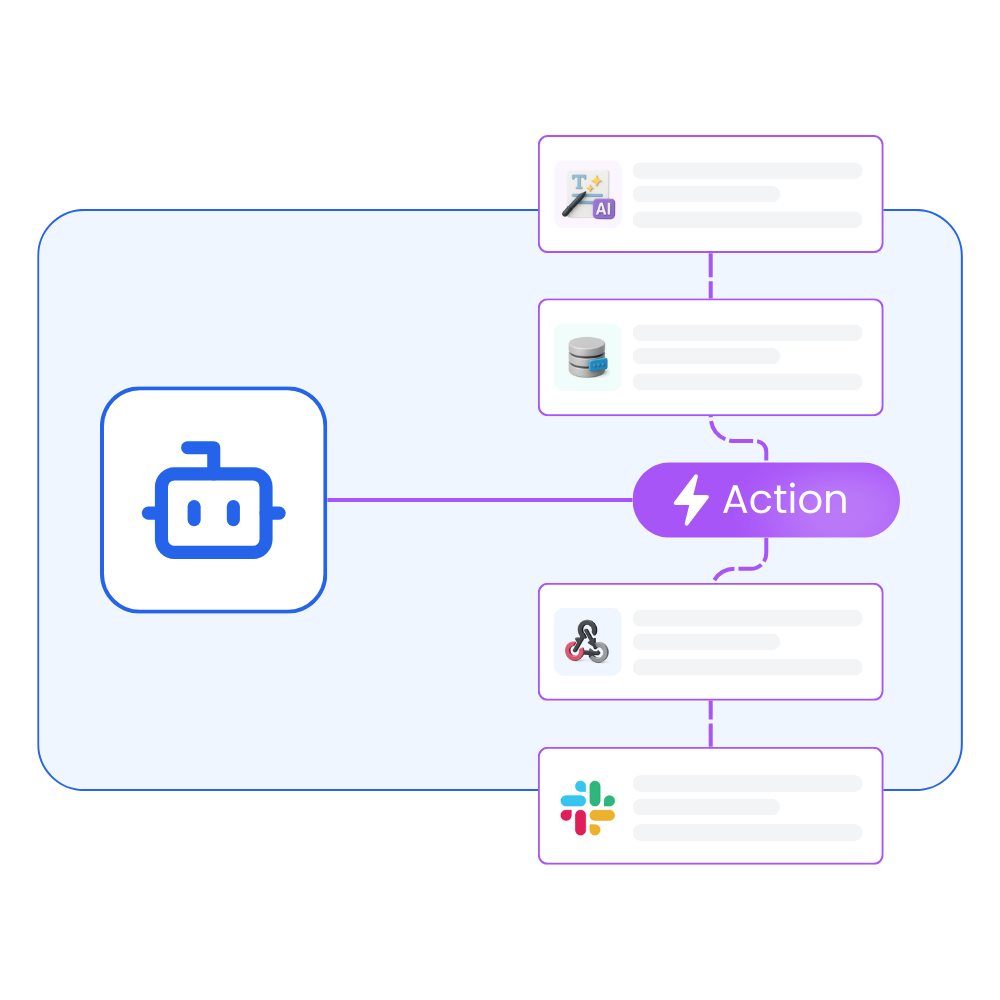

Agentic workflows and integrations

Create a workflow for your AI agents and tools to perform tasks and integrations with third-party services.

All you need to launch and sell your AI products with the right AI model

Appaca provides out-of-the-box solutions your AI apps need.

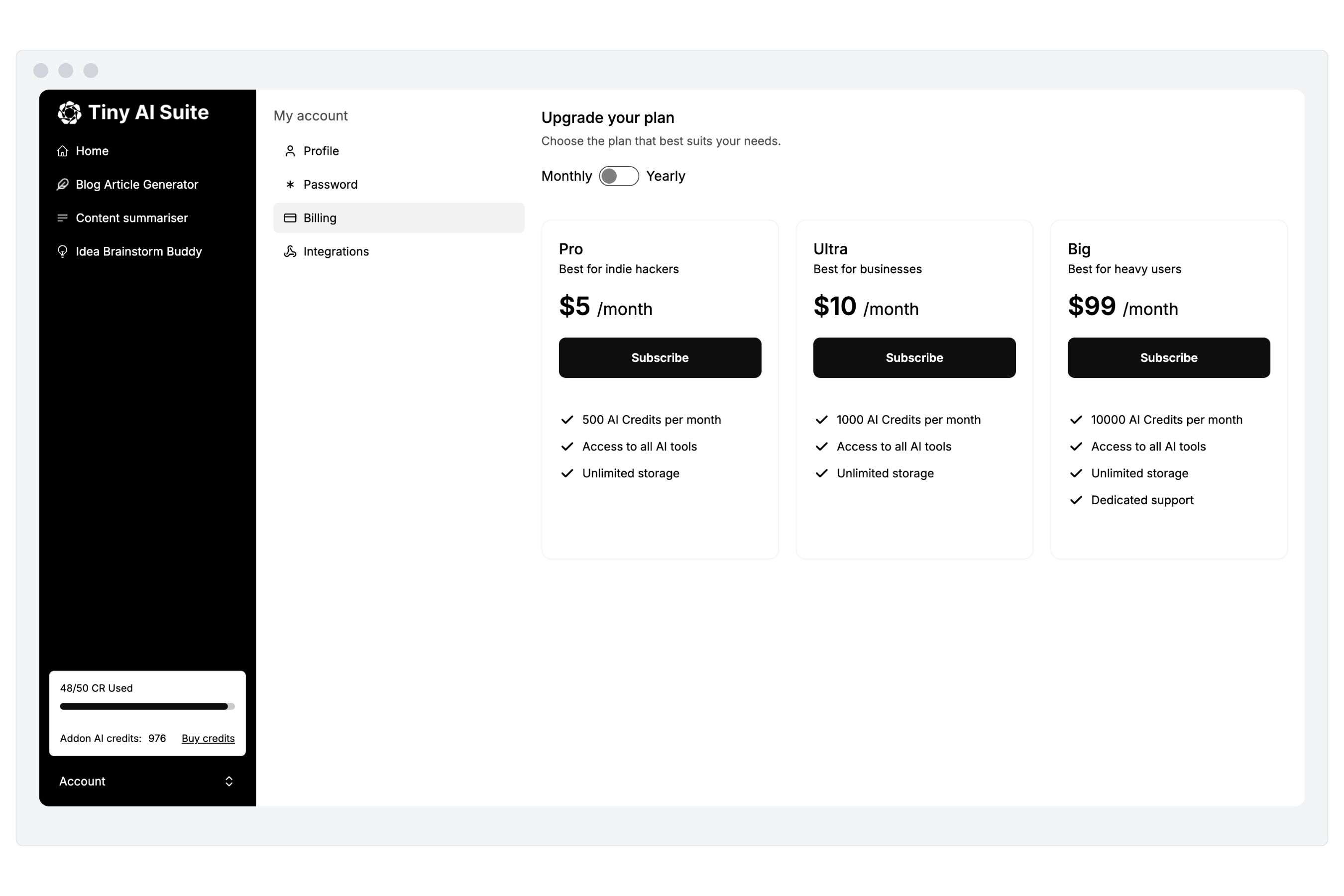

Monetize your AI

Sell your AI agents and tools as a complete product with subscription and AI credits billing. Generate revenue for your business.

“I've built with various AI tools and have found Appaca to be the most efficient and user-friendly solution.”

Cheyanne Carter

Founder & CEO, Edubuddy

Build AI products powered by Claude 4.1 Opus or Grok 3 Mini

Create AI tools and agents on Appaca. Choose your model, build your product, launch to customers.