Build AI products powered by GPT-4o mini Audio or Gemini 2.5 Flash - no coding required

Start building

GPT-4o mini Audio vs Gemini 2.5 Flash

Compare GPT-4o mini Audio and Gemini 2.5 Flash. Build AI products powered by either model on Appaca.

Get started

Model Comparison

| Feature | GPT-4o mini Audio | Gemini 2.5 Flash |
|---|---|---|
| Provider | OpenAI | Google |
| Model Type | audio | text |
| Context Window | 128,000 tokens | 1,000,000 tokens |
| Input Cost | $0.15 / 1M tokens | $0.30 / 1M tokens |
| Output Cost | $0.60 / 1M tokens | $2.50 / 1M tokens |
| Build with it | Build with GPT-4o mini Audio | Build with Gemini 2.5 Flash |
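To make the pricing and context figures in the comparison table concrete, here is a minimal Python sketch that estimates the cost of a workload and checks whether a request fits in each model's context window. The `ModelPricing` class and both helper functions are illustrative names, not part of Appaca or either provider's SDK. The sketch assumes the listed rates apply uniformly to every token; audio tokens may be billed differently in practice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Illustrative container for the figures in the comparison table."""
    name: str
    context_window: int            # maximum tokens per request
    input_usd_per_million: float   # USD per 1M input tokens
    output_usd_per_million: float  # USD per 1M output tokens


# Values copied from the comparison table.
GPT_4O_MINI_AUDIO = ModelPricing("GPT-4o mini Audio", 128_000, 0.15, 0.60)
GEMINI_25_FLASH = ModelPricing("Gemini 2.5 Flash", 1_000_000, 0.30, 2.50)


def estimate_cost_usd(model: ModelPricing, input_tokens: int, output_tokens: int) -> float:
    """Estimate cost in USD, assuming one flat rate per token direction."""
    total = (input_tokens * model.input_usd_per_million
             + output_tokens * model.output_usd_per_million)
    return total / 1_000_000


def fits_context(model: ModelPricing, input_tokens: int, output_tokens: int) -> bool:
    """Return True if input plus output tokens fit in the context window."""
    return input_tokens + output_tokens <= model.context_window


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests of 2,000 input and 500 output tokens.
    requests, tokens_in, tokens_out = 10_000, 2_000, 500
    for model in (GPT_4O_MINI_AUDIO, GEMINI_25_FLASH):
        cost = estimate_cost_usd(model, requests * tokens_in, requests * tokens_out)
        print(f"{model.name}: ${cost:.2f}, fits per request: "
              f"{fits_context(model, tokens_in, tokens_out)}")
```

The workload numbers are made up for illustration; substitute your own traffic estimates before comparing the two models.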

Build AI products powered by any model

Appaca is a platform that enables you to create AI tools and agents. Choose the best model for your product and launch to customers.

Multi-Model Support

Power your AI product with GPT-4o mini Audio, Gemini 2.5 Flash, or any supported model. Switch anytime.

No Infrastructure Needed

We handle all API integrations. You focus on building your AI product, not managing keys.

Launch & Monetize

Build once, sell to customers. Appaca handles payments, hosting, and scaling.

No credit card required • Build your first AI product in minutes

Strengths & Best Use Cases

GPT-4o mini Audio

OpenAI

1. Affordable multimodal audio model

- Extremely low-cost audio + text model for production-scale usage.

- Ideal for startups and high-volume traffic apps.

2. Fast real-time performance

- Low latency suitable for responsive voice assistants, AI phone bots, IVR flows, and audio chat apps.

- Great when speed matters more than deep reasoning.

3. Audio input and audio output

- Accepts raw audio (speech, recordings, commands).

- Generates natural audio responses via the REST API.

4. Large 128K context window

- Handles long conversations, transcriptions, and extended instructions.

- Supports multi-step voice workflows or multi-part inputs.

5. Great for lightweight reasoning workloads

- Performs well for classification, instructions, Q&A, rewriting, and audio-driven tasks.

- Good for voice agents that don't need high-end reasoning like GPT-5.1.

6. Works across major endpoints

- Chat Completions, Responses API, Realtime API, Assistants, Batch.

- Supports streaming and function calling.

7. Scalable for commercial production

- Perfect for customer support hotlines, appointment bots, FAQ voice agents, or embedded voice UI in apps.

- Reliable and predictable output behavior given its price.

8. Preview model designed for experimentation

- Lets teams prototype voice-first features with minimal cost.

- Useful stepping-stone before upgrading to GPT-4o Audio or GPT-5 audio models.
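For teams that call the model directly rather than through Appaca, the audio-in, audio-out flow from point 3 can be sketched as a Chat Completions request body. This is a rough sketch, not a definitive integration: the model identifier `gpt-4o-mini-audio-preview`, the `alloy` voice, and the `wav` format are assumptions to verify against OpenAI's current documentation, and `build_audio_chat_request` is a hypothetical helper name. The code only builds the JSON payload; it does not send it.

```python
import base64
import json

# Assumed model identifier; confirm the exact name in OpenAI's model list.
MODEL_ID = "gpt-4o-mini-audio-preview"


def build_audio_chat_request(wav_bytes: bytes, instruction: str,
                             voice: str = "alloy") -> dict:
    """Build a request body that sends speech in and asks for speech back."""
    # Audio is sent inline as base64-encoded text inside the message content.
    encoded = base64.b64encode(wav_bytes).decode("ascii")
    return {
        "model": MODEL_ID,
        # Ask for both a text transcript and a spoken reply.
        "modalities": ["text", "audio"],
        "audio": {"voice": voice, "format": "wav"},
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": instruction},
                    {
                        "type": "input_audio",
                        "input_audio": {"data": encoded, "format": "wav"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real WAV recording.
    placeholder_audio = b"not-a-real-wav-file"
    body = build_audio_chat_request(placeholder_audio,
                                    "Answer the caller's question briefly.")
    print(json.dumps(body, indent=2))
```

Sending the payload requires an API key and an HTTP client, which this sketch deliberately leaves out.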

Gemini 2.5 Flash

Google

1. Highly cost-efficient for large-scale workloads

- Extremely low input cost ($0.30/M) and affordable output cost.

- Built for production environments where throughput and budget matter.

- Significantly cheaper than competitors like o4-mini, Claude Sonnet, and Grok on text workloads.

2. Fast performance optimized for everyday tasks

- Ideal for summarization, chat, extraction, classification, captioning, and lightweight reasoning.

- Designed as a high-speed “workhorse model” for apps that require low latency.

3. Built-in “thinking budget” control

- Adjustable reasoning depth lets developers trade off latency vs. accuracy.

- Enables dynamic cost management for large agent systems.

4. Native multimodality across all major formats

- Inputs: text, images, video, audio, PDFs.

- Outputs: text + native audio synthesis (24 languages with the same voice).

- Great for conversational agents, voice interfaces, multimodal analysis, and captioning.

5. Industry-leading long context window

- 1,000,000 token context window.

- Supports long documents, multi-file processing, large datasets, and long multimedia sequences.

- Stronger MRCR long-context performance vs previous Flash models.

6. Native audio generation and multilingual conversation

- High-quality, expressive audio output with natural prosody.

- Style control for tones, accents, and emotional delivery.

- Noise-aware speech understanding for real-world conditions.

7. Strong benchmark performance for its cost

- 11% on Humanity's Last Exam (no tools) - competitive with Grok and Claude.

- 82.8% on GPQA diamond (science reasoning).

- 72.0% on AIME 2025 single-attempt math.

- Excellent multimodal reasoning (79.7% on MMMU).

- Leading long-context performance in its price tier.

8. Capable coding assistance

- 63.9% on LiveCodeBench (single attempt).

- 61.9%/56.7% on Aider Polyglot (whole/diff).

- Agentic coding support + tool use + function calling.

9. Fully supports tool integration

- Function calling.

- Structured outputs.

- Search-as-a-tool.

- Code execution (via Google Antigravity / Gemini API environments).

10. Production-ready availability

- Available in: Gemini App, Google AI Studio, Gemini API, Vertex AI, Live API.

- General availability (GA) with stable endpoints and documentation.
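The "thinking budget" control from point 3 can be illustrated with a small Python sketch that builds a Gemini API `generateContent` request body with a per-task budget. Treat it as a sketch under stated assumptions: the `generationConfig.thinkingConfig.thinkingBudget` field path and the 0 to 24,576 token range reflect our reading of the Gemini API documentation and should be verified, and the task tiers in `BUDGET_BY_TASK` are invented for illustration. The code builds the payload only and makes no network call.

```python
import json

# Assumed model identifier and budget ceiling; verify against the Gemini API docs.
MODEL_ID = "gemini-2.5-flash"
MAX_THINKING_BUDGET = 24_576

# Hypothetical tiers: spend fewer reasoning tokens on simpler tasks.
BUDGET_BY_TASK = {
    "classification": 0,           # no extended reasoning
    "summarization": 1_024,
    "multi_step_reasoning": 8_192,
}


def build_generate_request(prompt: str, task: str) -> dict:
    """Build a generateContent body whose thinking budget depends on the task."""
    if task not in BUDGET_BY_TASK:
        raise ValueError(f"unknown task tier: {task!r}")
    budget = BUDGET_BY_TASK[task]
    if not 0 <= budget <= MAX_THINKING_BUDGET:
        raise ValueError(f"thinking budget out of range: {budget}")
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {
            "thinkingConfig": {"thinkingBudget": budget},
        },
    }


if __name__ == "__main__":
    body = build_generate_request("Label this ticket as billing or technical.",
                                  "classification")
    print(MODEL_ID)
    print(json.dumps(body, indent=2))
```

Mapping task types to budgets in one table keeps the latency and cost trade-off in a single place, which is one way to manage spend across a larger agent system.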

Prompts to Get Started

Use these prompts to power AI products you build on Appaca. Each works great with the models above.

Best for GPT-4o mini Audio

audio

Video Marketing Strategy (Storytelling + Proof)

Build a video marketing strategy that uses storytelling to show how your USP transforms persona challenges into outcomes.

Customer Loyalty Program (Rewards + Advocacy)

Create a loyalty program that rewards continued engagement and advocacy, reinforcing how your USP supports ongoing persona challenges.

Social Media Content Calendar Generator

Generate a complete month of social media content ideas organized by platform, content type, and posting schedule.

Best for Gemini 2.5 Flash

text

Account-Based Marketing (ABM) Campaign (Tailored Plays)

Create an ABM campaign targeting high-value accounts with tailored messages that connect persona challenges to your USP.

Hotel vs Short-Term Rental: True Cost & Value Comparison

Compare the true total cost and business amenities of a hotel vs an approved short-term rental for longer stays.

Forum Insider: Emotional Pain Points + Empathy Statements

Analyze forum threads and social comments to uncover urgent problems, voice-of-customer language, and empathy statements for marketing copy.

What AI product will you build?

Describe your AI product idea and Appaca will help you create it - powered by GPT-4o mini Audio, Gemini 2.5 Flash, or any model you choose.

Free to start • No coding required • Launch to customers

See how Appaca works

Turn your AI ideas into AI products with the right AI model

Appaca is the complete platform for building AI agents, automations, and customer-facing interfaces. No coding required.

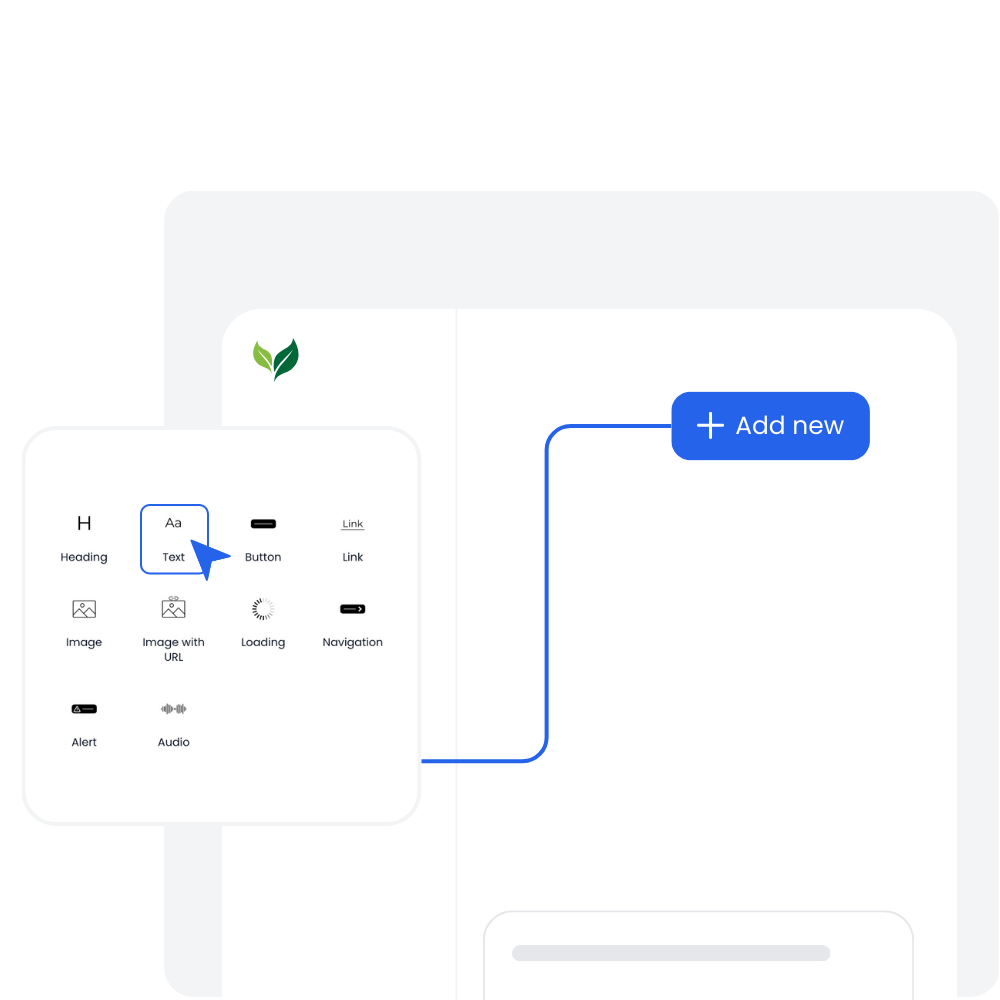

Customer-facing Interface

Create and style user interfaces for your AI agents and tools easily according to your brand.

Multimodal LLMs

Create, manage, and deploy custom AI models for text, image, and audio - trained on your own knowledge base.

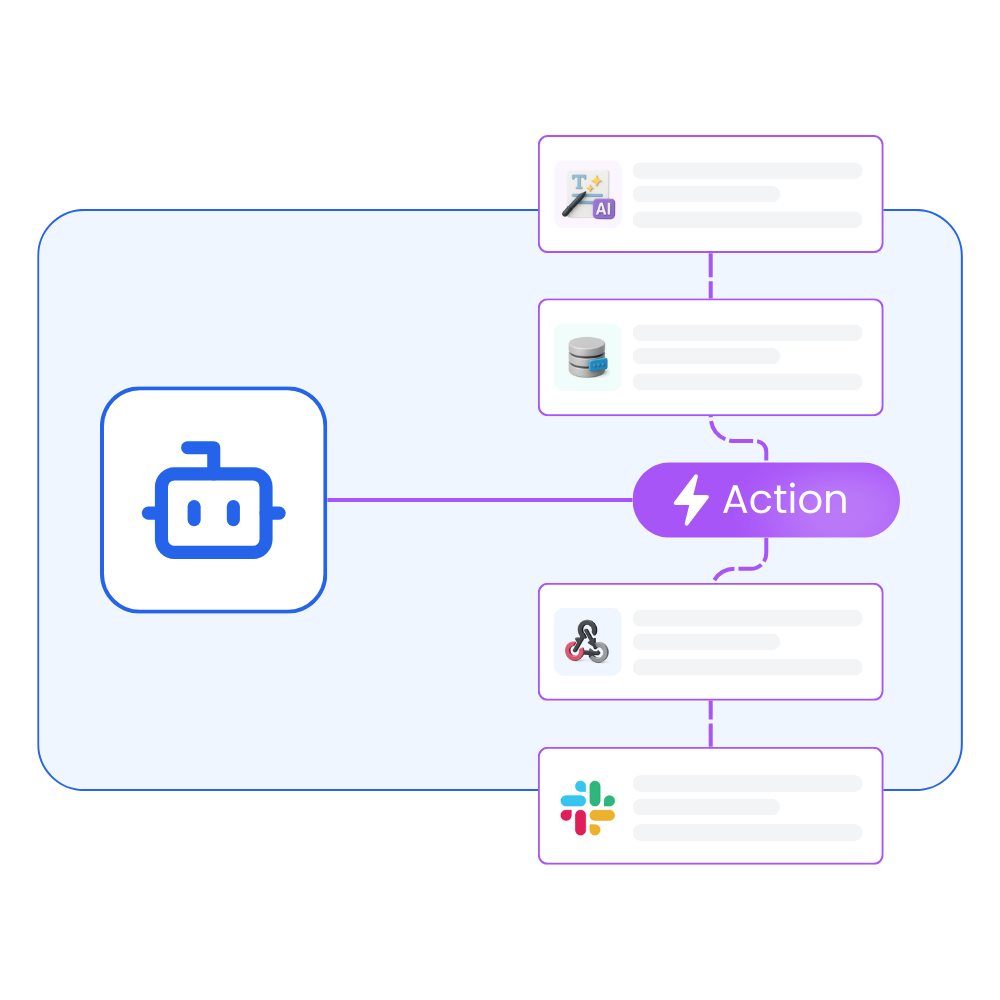

Agentic workflows and integrations

Create a workflow for your AI agents and tools to perform tasks and integrations with third-party services.


All you need to launch and sell your AI products with the right AI model

Appaca provides out-of-the-box solutions your AI apps need.

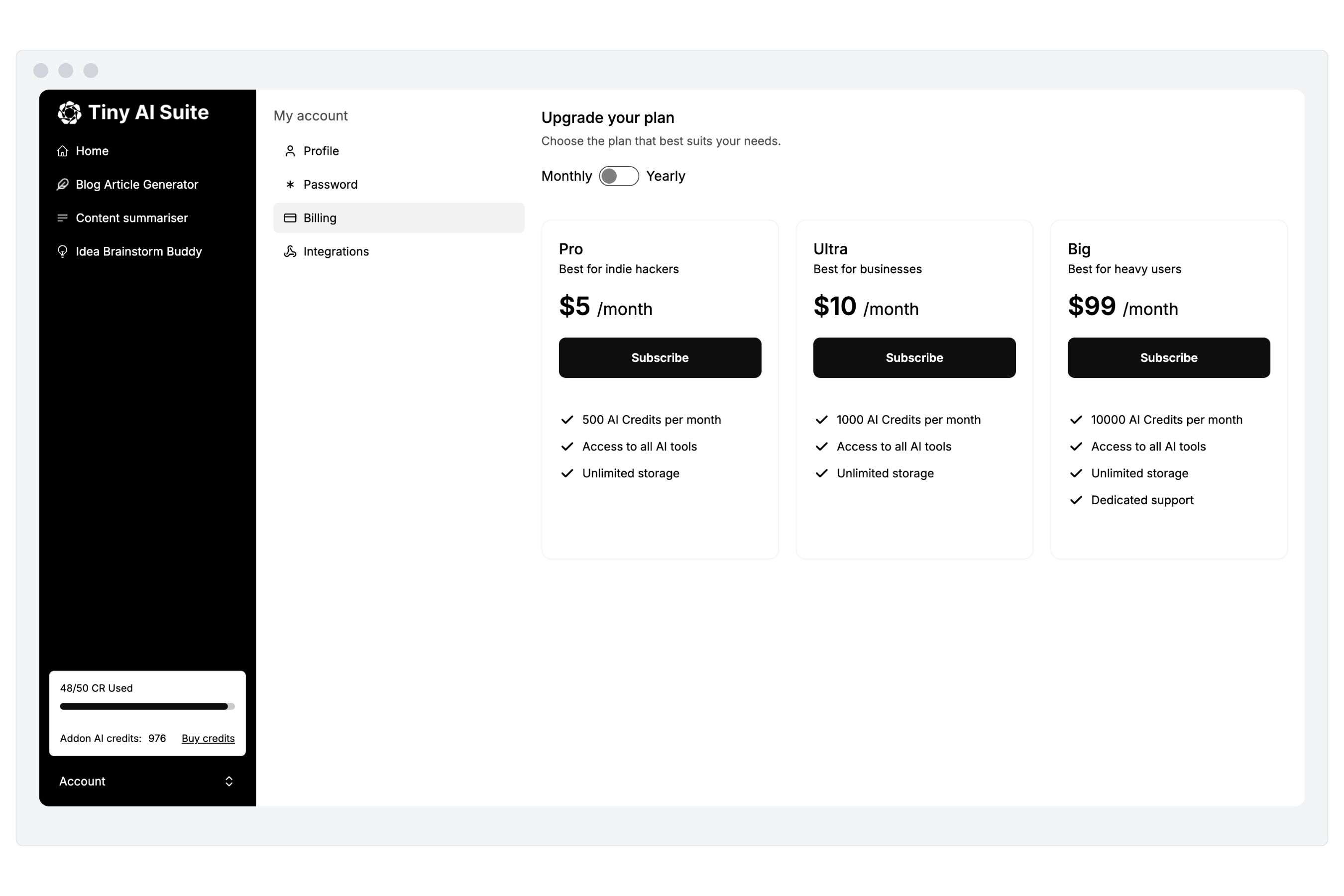

Monetize your AI

Sell your AI agents and tools as a complete product with subscription and AI credits billing. Generate revenue for your business.

“I've built with various AI tools and have found Appaca to be the most efficient and user-friendly solution.”

Cheyanne Carter

Founder & CEO, Edubuddy


Build AI products powered by GPT-4o mini Audio or Gemini 2.5 Flash

Create AI tools and agents on Appaca. Choose your model, build your product, launch to customers.