Build AI products powered by GPT-4o mini or Qwen3-Omni-Flash-Realtime - no coding required

GPT-4o mini vs Qwen3-Omni-Flash-Realtime

Compare GPT-4o mini and Qwen3-Omni-Flash-Realtime. Build AI products powered by either model on Appaca.

Model Comparison

| Feature | GPT-4o mini | Qwen3-Omni-Flash-Realtime |
|---|---|---|
| Provider | OpenAI | Alibaba Cloud |
| Model Type | Text | Multimodal |
| Context Window | 128,000 tokens | 65,536 tokens |
| Input Cost | $0.15 / 1M tokens | $0.52 / 1M tokens |
| Output Cost | $0.60 / 1M tokens | $1.99 / 1M tokens |
| Build with it | Build with GPT-4o mini | Build with Qwen3-Omni-Flash-Realtime |
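
To make the pricing rows concrete, here is a minimal sketch that turns the per-1M-token prices from the table into an estimated bill. The function name `estimate_cost` and the sample workload are hypothetical, and the sketch assumes every token is billed at the flat rates listed above, which may not hold for audio or image input.

```python
# Per-1M-token prices in USD, copied from the comparison table.
PRICES = {
    "GPT-4o mini": {"input": 0.15, "output": 0.60},
    "Qwen3-Omni-Flash-Realtime": {"input": 0.52, "output": 1.99},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for one workload (hypothetical helper)."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical monthly workload: 2M input tokens, 500K output tokens.
    for name in PRICES:
        print(f"{name}: ${estimate_cost(name, 2_000_000, 500_000):.3f}")
```

For this assumed workload the estimate comes to $0.60 on GPT-4o mini and roughly $2.04 on Qwen3-Omni-Flash-Realtime, about 3.4 times more at the listed rates.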

Build AI products powered by any model

Appaca is a platform that enables you to create AI tools and agents. Choose the best model for your product and launch to customers.

Multi-Model Support

Power your AI product with GPT-4o mini, Qwen3-Omni-Flash-Realtime, or any supported model. Switch anytime.

No Infrastructure Needed

We handle all API integrations. You focus on building your AI product, not managing keys.

Launch & Monetize

Build once, sell to customers. Appaca handles payments, hosting, and scaling.

No credit card required • Build your first AI product in minutes

Strengths & Best Use Cases

GPT-4o mini

OpenAI

1. Fast, cost-efficient performance

- Designed for low-latency, high-throughput workloads.

- Ideal for production systems where speed and budget matter more than deep reasoning power.

2. Great for focused NLP tasks

- Excels at classification, tagging, entity extraction, rewriting, paraphrasing, and SEO tasks.

- Strong at translation and keyword generation due to efficient language understanding.

3. Multimodal input capable (text + image)

- Accepts images for lightweight visual analysis, categorization, or extraction.

- Outputs text only, which keeps responses simple to parse and integrate.

4. Supports advanced developer features

- Structured Outputs for predictable schemas.

- Function calling for building tool-augmented agents.

- Fully compatible with Batch API for large-scale processing.

5. Easy to fine-tune

- One of the best OpenAI models for domain-specific fine-tuning.

- Allows organizations to compress larger models' behavior (like GPT-4o) into a smaller footprint.

6. Suitable for distillation workflows

- Can approximate GPT-4o or GPT-5 outputs using distillation, dramatically reducing cost.

- Enables scalable deployment for high-volume applications.

7. Large context window for its size

- 128K context supports multi-step tasks, multi-document inputs, and long-running conversations.

- Useful for agents that need memory across extended sessions.

8. Reliable for commercial production

- Stable, predictable, and low-variance outputs make it ideal for automation and enterprise stacks.

- Works well in synchronous or asynchronous pipelines.
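
The context-window point in item 7 can be checked with simple arithmetic before a request is sent. The sketch below is illustrative only: `fits_context` is a hypothetical helper, token counts are assumed to come from whatever tokenizer you already use, and it assumes the reserved output tokens count against the same window as the prompt.

```python
# Context window sizes in tokens, copied from the comparison table.
CONTEXT_WINDOW = {
    "GPT-4o mini": 128_000,
    "Qwen3-Omni-Flash-Realtime": 65_536,
}


def fits_context(model: str, prompt_tokens: int, reserved_output_tokens: int = 0) -> bool:
    """Return True if the prompt plus reserved output fits the model's window.

    Assumption: prompt and output share one window; check your provider's
    documentation for the actual limits.
    """
    return prompt_tokens + reserved_output_tokens <= CONTEXT_WINDOW[model]


if __name__ == "__main__":
    # Hypothetical request: 100K prompt tokens, 4K tokens reserved for the reply.
    for name in CONTEXT_WINDOW:
        print(name, fits_context(name, 100_000, 4_000))
```

Under these assumptions a 100,000-token prompt fits GPT-4o mini but not Qwen3-Omni-Flash-Realtime, so long multi-document inputs would need trimming or chunking on the latter.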

Qwen3-Omni-Flash-Realtime

Alibaba Cloud

1. Real-time audio streaming

- Built-in VAD for detecting speech.

2. Multimodal reasoning

- Text, audio, image inputs.

3. Great for live agents

- Call centers, tutoring, interactive systems.

Prompts to Get Started

Use these prompts to power AI products you build on Appaca. Each works great with the models above.

Best for GPT-4o mini

Resume Bullet Point Optimizer

Transform weak resume responsibilities into strong, results-oriented bullet points.

Customer Advisory Board (CAB) Program

Design a customer advisory board that gathers persona leader insights to refine marketing strategy, strengthen your USP, and address evolving challenges.

Website Marketing Chatbot (Personalized Guidance)

Design a website chatbot that qualifies visitors, addresses persona challenges, and routes them to USP-focused content and next steps.

Best for Qwen3-Omni-Flash-Realtime

Welcome Email Series Generator

Create a complete automated welcome email sequence that nurtures new subscribers and drives conversions.

Video Marketing Strategy (Storytelling + Proof)

Build a video marketing strategy that uses storytelling to show how your USP transforms persona challenges into outcomes.

Brand Messaging Guide (Persona + USP)

Create a brand messaging guide with positioning, value props, proof points, and voice tailored to your persona’s challenges and your USP.

What AI product will you build?

Describe your AI product idea and Appaca will help you create it - powered by GPT-4o mini, Qwen3-Omni-Flash-Realtime, or any model you choose.

Free to start • No coding required • Launch to customers

See how Appaca works

Turn your AI ideas into AI products with the right AI model

Appaca is the complete platform for building AI agents, automations, and customer-facing interfaces. No coding required.

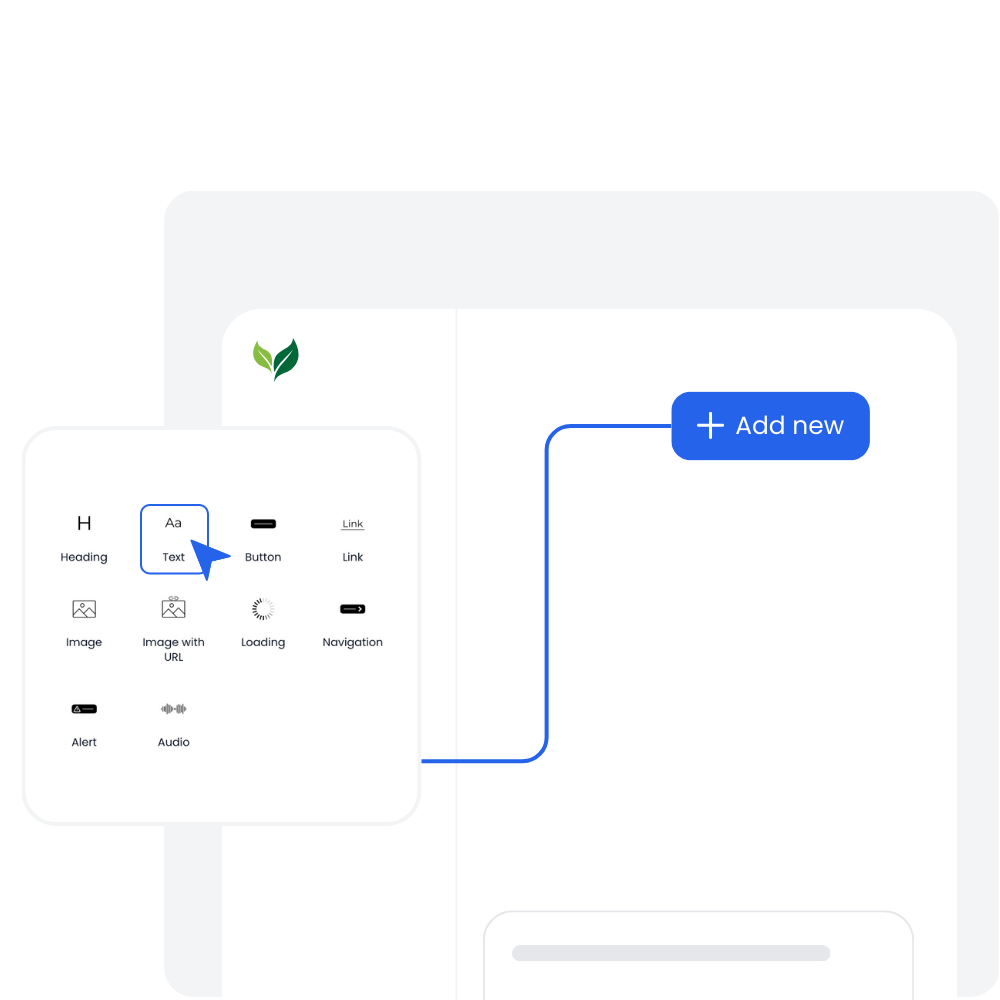

Customer-facing Interface

Create and style user interfaces for your AI agents and tools easily according to your brand.

Multimodal LLMs

Create, manage, and deploy custom AI models for text, image, and audio - trained on your own knowledge base.

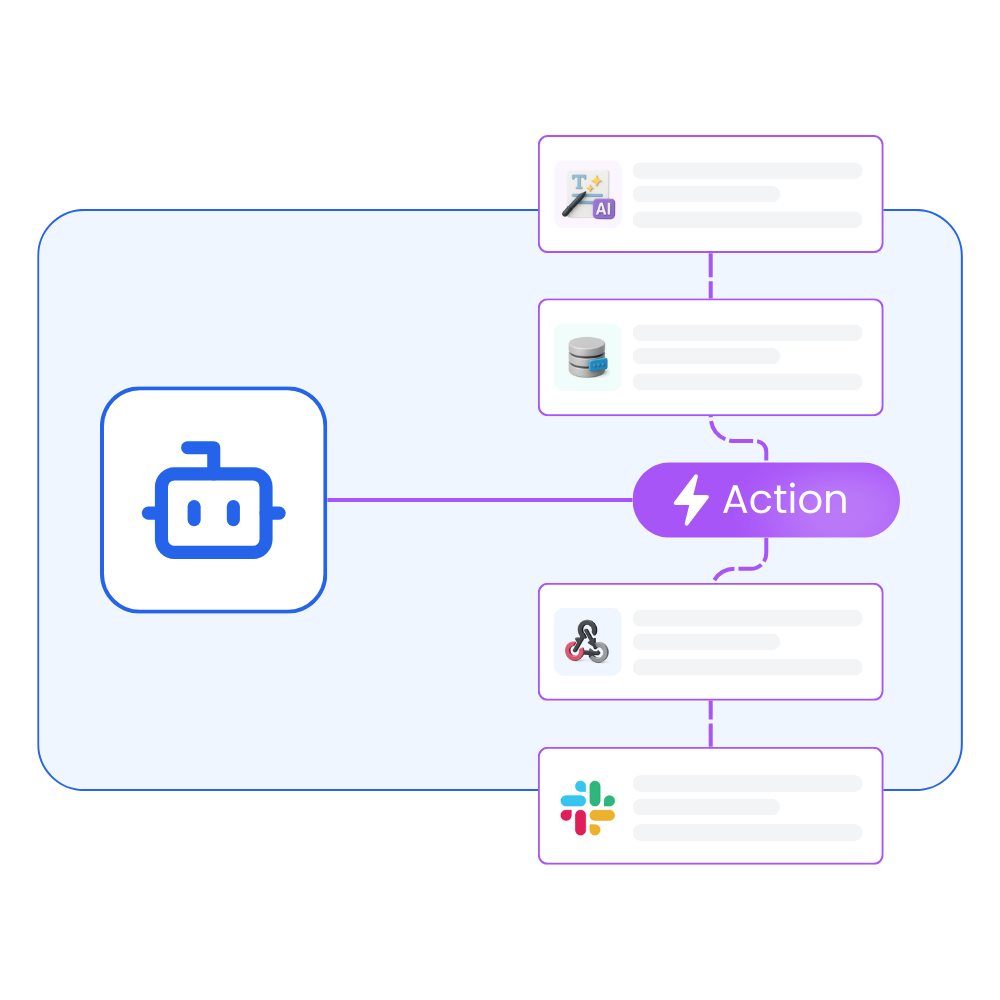

Agentic workflows and integrations

Create a workflow for your AI agents and tools to perform tasks and integrations with third-party services.


All you need to launch and sell your AI products with the right AI model

Appaca provides out-of-the-box solutions your AI apps need.

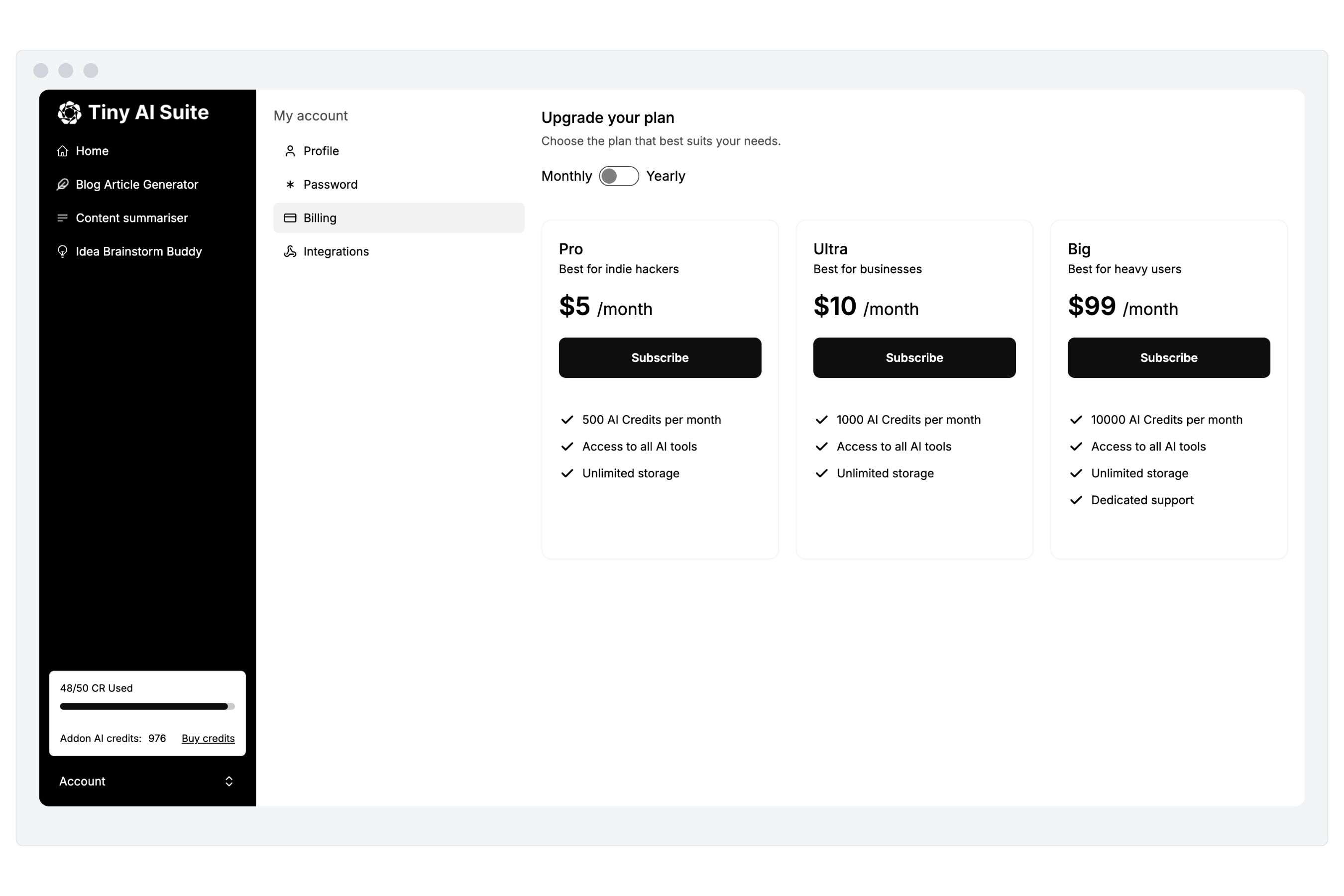

Monetize your AI

Sell your AI agents and tools as a complete product with subscription and AI credits billing. Generate revenue for your business.

“I've built with various AI tools and have found Appaca to be the most efficient and user-friendly solution.”

Cheyanne Carter

Founder & CEO, Edubuddy


Build AI products powered by GPT-4o mini or Qwen3-Omni-Flash-Realtime

Create AI tools and agents on Appaca. Choose your model, build your product, launch to customers.