Build AI products powered by GPT-5.1 Codex or o3-mini - no coding required

GPT-5.1 Codex vs o3-mini

Compare GPT-5.1 Codex and o3-mini. Build AI products powered by either model on Appaca.

Model Comparison

| Feature | GPT-5.1 Codex | o3-mini |
|---|---|---|
| Provider | OpenAI | OpenAI |
| Model Type | text | text |
| Context Window | 400,000 tokens | 200,000 tokens |
| Input Cost | $1.25 / 1M tokens | $1.10 / 1M tokens |
| Output Cost | $10.00 / 1M tokens | $4.40 / 1M tokens |
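To see how the per-million-token prices in the comparison table translate into the cost of a single request, here is a minimal Python sketch. The workload (50,000 input tokens, 5,000 output tokens) is hypothetical, and the dictionary keys are illustrative labels, not identifiers exposed by Appaca. For reasoning models, hidden reasoning tokens are billed as output tokens, so they should be counted in `output_tokens`.

```python
# Per-million-token prices (USD), copied from the comparison table.
# The keys are illustrative labels only.
PRICES_PER_MILLION = {
    "gpt-5.1-codex": {"input": 1.25, "output": 10.00},
    "o3-mini": {"input": 1.10, "output": 4.40},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request.

    For reasoning models, output_tokens should include hidden reasoning
    tokens, because those are billed at the output rate.
    """
    prices = PRICES_PER_MILLION[model]
    return (
        input_tokens * prices["input"] + output_tokens * prices["output"]
    ) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 50,000 tokens of code in, 5,000 tokens out.
    for name in PRICES_PER_MILLION:
        print(f"{name}: ${request_cost(name, 50_000, 5_000):.4f}")
```

For this hypothetical workload the script prints `gpt-5.1-codex: $0.1125` and `o3-mini: $0.0770`. The gap widens as responses get longer, because the two models differ far more on output price ($10.00 vs $4.40) than on input price.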

Build AI products powered by any model

Appaca is a platform that enables you to create AI tools and agents. Choose the best model for your product and launch to customers.

Multi-Model Support

Power your AI product with GPT-5.1 Codex, o3-mini, or any supported model. Switch anytime.

No Infrastructure Needed

We handle all API integrations. You focus on building your AI product, not managing keys.

Launch & Monetize

Build once, sell to customers. Appaca handles payments, hosting, and scaling.

No credit card required • Build your first AI product in minutes

Strengths & Best Use Cases

GPT-5.1 Codex

OpenAI

1. Purpose-Built for Agentic Coding

- Designed specifically for environments where the model acts as an autonomous or semi-autonomous coding agent.

- Optimized for multi-step reasoning in code tasks such as planning, refactoring, debugging, file generation, and tool coordination.

2. Enhanced Coding Intelligence

- Extends GPT-5.1's advanced reasoning capabilities to handle complex software architecture decisions.

- Better accuracy in code generation across languages (JavaScript, Python, TypeScript, Go, Rust, etc.).

- Produces cleaner, more idiomatic code aligned with modern frameworks and best practices.

3. Superior Tool Use & Code Navigation

- Excels at reading, understanding, and transforming multi-file codebases.

- Works well with Codex workflows that simulate real developer tooling.

- Strong at following function signatures, constraints, and code patterns within an existing project.

4. Long-Range Context Awareness

- 400,000-token context window enables the model to ingest large repositories or multiple files simultaneously.

- Supports deep analysis of project structures, dependencies, and cross-file logic.

5. Multi-Modal Development Capabilities

- Accepts text and image input and produces text output, which suits tasks like:

  - Reading UI mockups or screenshots to generate code

  - Understanding architectural diagrams

  - Reviewing images of whiteboard sessions

6. Agentic Workflow Optimization

- Built to manage longer chains of thought and execution typically required in:

  - Automated code repair

  - Project bootstrapping

  - Linting and migration tasks

  - Long-running coding agents using planning + execution loops

7. Continually Updated Model Snapshot

- Codex-specific version receives regular upgrades behind the scenes.

- Ensures the latest coding improvements without requiring developers to update model names.

8. Reliable Instruction Following

- Highly consistent in honoring explicit constraints:

  - Code styles

  - Folder structures

  - API contracts

  - Framework conventions

9. Broad API Support

- Available through OpenAI's API, notably the Responses API.

- Ideal for apps that need reasoning-heavy coding agents or generative development environments.

o3-mini

OpenAI

1. High-intelligence small reasoning model

- Delivers strong reasoning performance in a compact footprint.

- Ideal for tasks that need intelligence but must stay cost-efficient.

2. Excellent for developer workflows

- Supports Structured Outputs, function calling, and Batch API.

- Reliable for backend automation, agents, and data-processing pipelines.

3. Strong text reasoning capabilities

- Handles multi-step logic, natural language analysis, SQL translation, entity extraction, and content generation.

- Works well for drafting landing-page copy, summarizing policies, and extracting knowledge (as shown in OpenAI's built-in examples).

4. 200K context window

- Allows large documents, multi-step analysis, and long-running conversations.

- Reduces the need for aggressive chunking or external retrieval systems.

5. 100K-token output limit

- Enables long explanations, multi-section documents, or detailed reasoning sequences.

6. Pure text-focused model

- Input/output is text-only (no image or audio support).

- Optimized for language-heavy reasoning and logic tasks.

7. Broad API compatibility

- Works with the Chat Completions, Responses, Assistants, and Batch APIs.

- Supports streaming, function calling, and structured outputs.

8. Cost-efficient for production at scale

- Targets the same cost and latency as o1-mini while delivering higher intelligence.
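Both context windows listed above (400,000 tokens for GPT-5.1 Codex, 200,000 for o3-mini) raise a practical question: will a given set of files fit? The Python sketch below gives a rough answer. It assumes about four characters per token, a common rule of thumb for English text that can be noticeably off for source code; a real tokenizer gives exact counts. It also reserves room for the response, since a model's output (including any reasoning tokens) counts against the same window. The model labels and the `fits_in_context` helper are hypothetical.

```python
from pathlib import Path

# Context windows (tokens) from the comparison table; keys are illustrative labels.
CONTEXT_WINDOWS = {"gpt-5.1-codex": 400_000, "o3-mini": 200_000}

# Assumption: roughly 4 characters per token (a rule of thumb for English text).
# Source code often tokenizes differently, so treat results as a rough guide.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of a string (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(texts: list[str], model: str, reserve_for_output: int = 0) -> bool:
    """Return True if the combined texts plus reserved output room fit the window."""
    total = sum(estimate_tokens(t) for t in texts)
    return total + reserve_for_output <= CONTEXT_WINDOWS[model]


if __name__ == "__main__":
    # Hypothetical usage: read every .py file under the current directory.
    sources = [
        p.read_text(encoding="utf-8", errors="ignore") for p in Path(".").rglob("*.py")
    ]
    for model in CONTEXT_WINDOWS:
        print(model, fits_in_context(sources, model, reserve_for_output=20_000))
```

Reserving output room matters most for reasoning models, where a long chain of reasoning tokens can consume a large share of the window before any visible answer appears.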

Prompts to Get Started

Use these prompts to power AI products you build on Appaca. Each works great with the models above.

Best for GPT-5.1 Codex

Cold Outreach Email Generator

Generate high-converting cold emails for sales, networking, or partnerships.

Professional Email Rewriter

Rewrite your rough drafts into polished, professional emails suitable for any business context.

Bug Fixer & Debugger

Identify bugs in your code, understand why they happen, and get a corrected version.

Best for o3-mini

Educational Webinars (Deep-Dive Curriculum)

Create educational webinar topics and formats that teach persona-relevant skills and connect your USP to solving key challenges.

Marketing Budget & Resource Allocation Plan

Allocate marketing budget and resources across the highest-impact initiatives to communicate your USP and address persona challenges.

Value-Added Service Inquiry (Pre-Arrival Email)

Write a polite pre-arrival email to request fee waivers or courtesy upgrades like premium Wi‑Fi and early check-in.

What AI product will you build?

Describe your AI product idea and Appaca will help you create it - powered by GPT-5.1 Codex, o3-mini, or any model you choose.

Free to start • No coding required • Launch to customers

See how Appaca works

Turn your AI ideas into AI products with the right AI model

Appaca is the complete platform for building AI agents, automations, and customer-facing interfaces. No coding required.

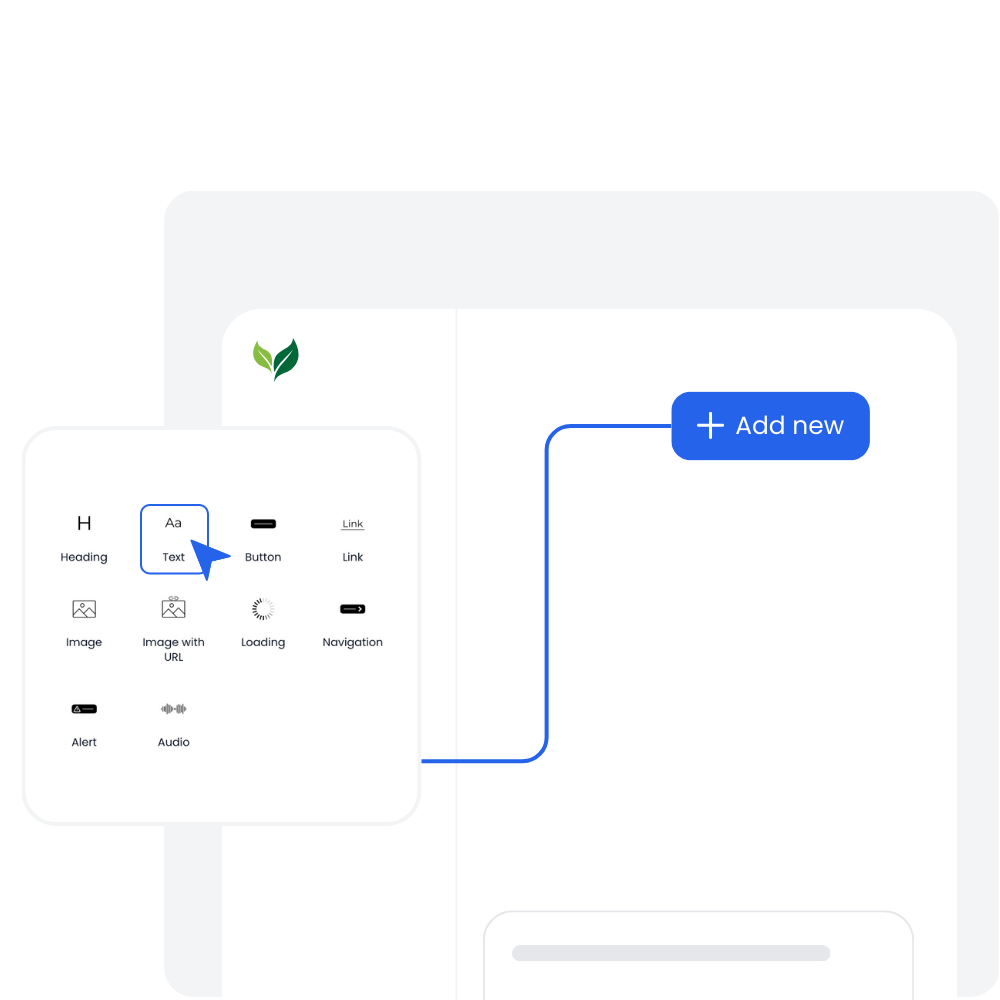

Customer-facing Interface

Easily create and style user interfaces for your AI agents and tools to match your brand.

Multimodal LLMs

Create, manage, and deploy custom AI models for text, image, and audio - trained on your own knowledge base.

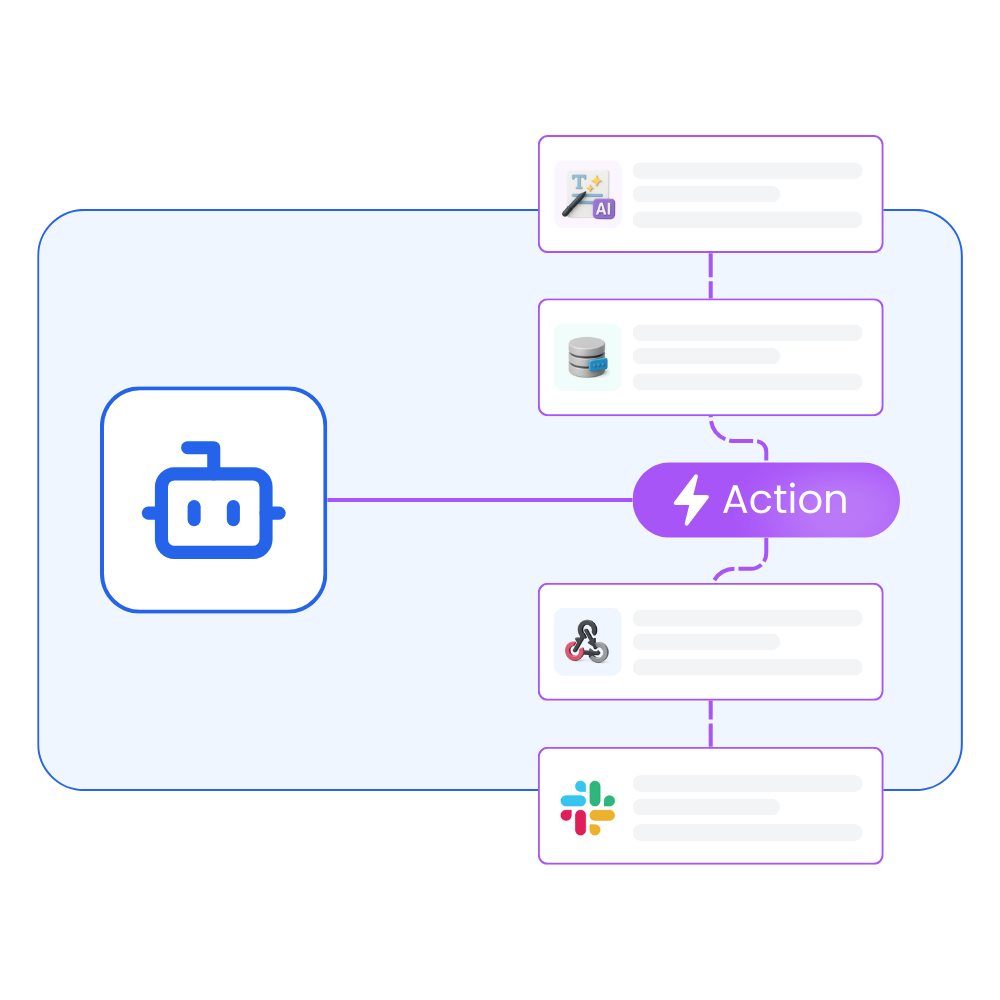

Agentic workflows and integrations

Create workflows that let your AI agents and tools perform tasks and integrate with third-party services.


All you need to launch and sell your AI products with the right AI model

Appaca provides out-of-the-box solutions your AI apps need.

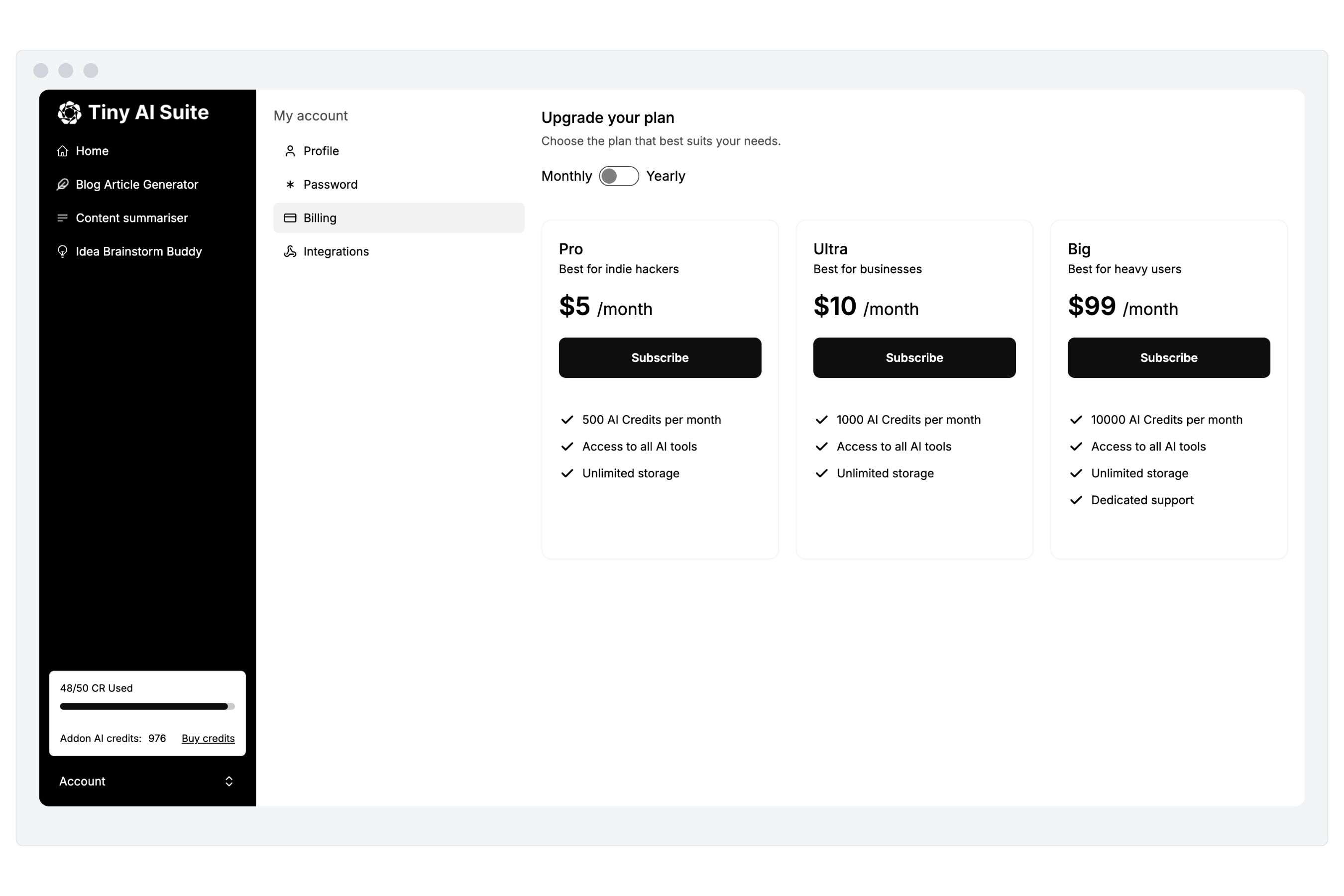

Monetize your AI

Sell your AI agents and tools as a complete product with subscription and AI-credit billing. Generate revenue for your business.

“I've built with various AI tools and have found Appaca to be the most efficient and user-friendly solution.”

Cheyanne Carter

Founder & CEO, Edubuddy

