Build AI products powered by GPT Image 1 or Gemini 2.5 Flash - no coding required

GPT Image 1 vs Gemini 2.5 Flash

Compare GPT Image 1 and Gemini 2.5 Flash. Build AI products powered by either model on Appaca.

Model Comparison

| Feature | GPT Image 1 | Gemini 2.5 Flash |
|---|---|---|
| Provider | OpenAI | Google |
| Model Type | Image | Text |
| Context Window | N/A | 1,000,000 tokens |
| Input Cost | $5.00 / 1M tokens | $0.30 / 1M tokens |
| Output Cost | N/A | $2.50 / 1M tokens |
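To make the per-token prices in the table concrete, here is a minimal sketch of a cost estimate for a text workload on Gemini 2.5 Flash. The rates are taken from the table; the function name and the example token counts are illustrative assumptions, and actual billing may differ. GPT Image 1 is left out because its output cost is listed as N/A in the table.

```python
# Rates copied from the comparison table (USD per 1M tokens).
GEMINI_25_FLASH_INPUT_PER_M = 0.30
GEMINI_25_FLASH_OUTPUT_PER_M = 2.50


def estimate_text_cost(input_tokens: int, output_tokens: int) -> float:
    """Return an estimated USD cost for one Gemini 2.5 Flash text workload."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens / 1_000_000 * GEMINI_25_FLASH_INPUT_PER_M
    output_cost = output_tokens / 1_000_000 * GEMINI_25_FLASH_OUTPUT_PER_M
    # Round to six decimals to hide floating-point noise.
    return round(input_cost + output_cost, 6)


# Hypothetical workload: 200,000 input tokens and 50,000 output tokens.
example_cost = estimate_text_cost(200_000, 50_000)
print(f"Estimated cost: ${example_cost:.3f}")
```

Under these assumptions the input side contributes $0.06 and the output side $0.125, so output tokens dominate the bill even though there are fewer of them.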

Build AI products powered by any model

Appaca is a platform that enables you to create AI tools and agents. Choose the best model for your product and launch to customers.

Multi-Model Support

Power your AI product with GPT Image 1, Gemini 2.5 Flash, or any supported model. Switch anytime.

No Infrastructure Needed

We handle all API integrations. You focus on building your AI product, not managing keys.

Launch & Monetize

Build once, sell to customers. Appaca handles payments, hosting, and scaling.

No credit card required • Build your first AI product in minutes

Strengths & Best Use Cases

GPT Image 1

OpenAI

1. State-of-the-Art Image Generation

- Produces high-quality, detailed images optimized for realism, style control, and prompt fidelity.

- Designed to handle complex visual scenes, compositions, and lighting conditions.

2. Natively Multimodal Architecture

- Can understand and reason over both text and images as inputs.

- Ideal for workflows like:

  - Editing based on reference images

  - Expanding sketches or mockups

  - Visual concept development

3. Flexible Output Resolutions & Quality Levels

- Supports multiple resolutions, including:

  - 1024x1024

  - 1024x1536

  - 1536x1024

- Offers three quality tiers (Low, Medium, High) to optimize for:

  - Cost efficiency

  - Speed

  - Maximum detail
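The resolutions and quality tiers listed above can be checked before a request is sent. The sketch below is one possible way to do that in plain Python; `build_image_request` is a hypothetical helper, and the payload keys and the `gpt-image-1` model identifier are assumptions to verify against the provider's API reference.

```python
# Sizes and quality tiers as listed in this section.
SUPPORTED_SIZES = {"1024x1024", "1024x1536", "1536x1024"}
QUALITY_TIERS = {"low", "medium", "high"}


def build_image_request(prompt: str, size: str = "1024x1024",
                        quality: str = "medium") -> dict:
    """Validate size and quality, then return a request payload as a dict.

    The keys below are an assumption about what an image generation
    call expects; no network request is made here.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if size not in SUPPORTED_SIZES:
        raise ValueError(
            f"unsupported size {size!r}; choose one of {sorted(SUPPORTED_SIZES)}"
        )
    quality = quality.lower()
    if quality not in QUALITY_TIERS:
        raise ValueError(
            f"unsupported quality {quality!r}; choose one of {sorted(QUALITY_TIERS)}"
        )
    return {
        "model": "gpt-image-1",  # assumed model identifier
        "prompt": prompt,
        "size": size,
        "quality": quality,
    }


# A landscape draft at the cheapest tier.
request = build_image_request("a red bicycle on a beach", "1536x1024", "low")
print(request)
```

Validating locally keeps obviously invalid combinations from turning into failed API calls.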

4. Multiple Pricing Models

- Pay-per-token for multimodal input:

  - Text input tokens

  - Image input tokens

- Pay-per-image generation for final output:

  - Low, Medium, and High quality tiers

- Enables businesses to balance cost and output needs.

5. Broad Use Cases

- Product photography and marketing assets

- Illustration, concept art, and creative ideation

- UX/UI mockups

- Style-guided image creation

- Generating reference images for design or storytelling

6. Supported Across Major API Endpoints

- Available via:

  - Chat Completions

  - Responses

  - Realtime

  - Assistants

  - Images (generations, edits)

- Allows tight integration into automated creative pipelines or user-facing apps.

7. Simplified Model Behavior for Stability

- No streaming, function calling, structured outputs, or fine-tuning.

- Focused solely on high-quality image generation without extra logic layers.

8. Consistent Results via Snapshots

- Supports snapshots for version locking.

- Ensures long-term reproducibility across production pipelines.

9. Ideal For

- Designers, marketers, and creatives

- Product teams needing image assets

- App builders integrating image generation workflows

- Agencies producing visual content at scale

Gemini 2.5 Flash

Google

1. Highly cost-efficient for large-scale workloads

- Extremely low input cost ($0.30/M) and affordable output cost.

- Built for production environments where throughput and budget matter.

- Significantly cheaper than competitors like o4-mini, Claude Sonnet, and Grok on text workloads.

2. Fast performance optimized for everyday tasks

- Ideal for summarization, chat, extraction, classification, captioning, and lightweight reasoning.

- Designed as a high-speed “workhorse model” for apps that require low latency.

3. Built-in “thinking budget” control

- Adjustable reasoning depth lets developers trade off latency vs. accuracy.

- Enables dynamic cost management for large agent systems.
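As a rough illustration of the latency versus accuracy trade-off described above, the sketch below picks a reasoning budget per task type. The task names, budget numbers, and the `thinking_config` / `thinking_budget` key names are all assumptions for illustration; check the Gemini API documentation for the exact field names and allowed ranges.

```python
# Hypothetical mapping from task type to a reasoning-token budget.
# The numbers are illustrative placeholders, not recommended values.
BUDGET_BY_TASK = {
    "classification": 0,       # skip extended reasoning for lowest latency
    "summarization": 512,
    "multi_step_agent": 4096,  # allow deeper reasoning for harder tasks
}
DEFAULT_BUDGET = 1024


def thinking_config_for(task: str, max_budget: int = 8192) -> dict:
    """Return a config fragment with a budget clamped to [0, max_budget]."""
    budget = BUDGET_BY_TASK.get(task, DEFAULT_BUDGET)
    budget = max(0, min(budget, max_budget))
    # Key names are an assumption; adapt them to the SDK you use.
    return {"thinking_config": {"thinking_budget": budget}}


for task in ("classification", "multi_step_agent", "unknown_task"):
    print(task, thinking_config_for(task))
```

Capping the budget with `max_budget` gives an agent system a single knob for cost control, while the per-task table keeps cheap tasks fast.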

4. Native multimodality across all major formats

- Inputs: text, images, video, audio, PDFs.

- Outputs: text + native audio synthesis (24 languages with the same voice).

- Great for conversational agents, voice interfaces, multimodal analysis, and captioning.

5. Industry-leading long context window

- 1,000,000 token context window.

- Supports long documents, multi-file processing, large datasets, and long multimedia sequences.

- Stronger MRCR long-context performance vs previous Flash models.
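To get a feel for what a 1,000,000 token window allows, here is a minimal sketch that checks whether a set of documents is likely to fit. The four-characters-per-token ratio is a rough heuristic and an assumption, as is the reserved output allowance; a real tokenizer will give different counts.

```python
CONTEXT_WINDOW_TOKENS = 1_000_000  # from the section above
CHARS_PER_TOKEN = 4                # rough heuristic (assumption)


def estimate_tokens(text: str) -> int:
    """Estimate the token count of text using ceiling division."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str],
                    reserved_output_tokens: int = 8_000) -> bool:
    """Return True if the documents plus reserved output likely fit."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserved_output_tokens <= CONTEXT_WINDOW_TOKENS


# Hypothetical inputs: three documents of about 400,000 characters each.
docs = ["x" * 400_000] * 3
print(fits_in_context(docs))
```

Reserving part of the window for the response avoids filling the entire context with input and leaving no room for output.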

6. Native audio generation and multilingual conversation

- High-quality, expressive audio output with natural prosody.

- Style control for tones, accents, and emotional delivery.

- Noise-aware speech understanding for real-world conditions.

7. Strong benchmark performance for its cost

- 11% on Humanity's Last Exam (no tools) - competitive with Grok and Claude.

- 82.8% on GPQA diamond (science reasoning).

- 72.0% on AIME 2025 single-attempt math.

- Excellent multimodal reasoning (79.7% on MMMU).

- Leading long-context performance in its price tier.

8. Capable coding assistance

- 63.9% on LiveCodeBench (single attempt).

- 61.9%/56.7% on Aider Polyglot (whole/diff).

- Agentic coding support + tool use + function calling.

9. Fully supports tool integration

- Function calling.

- Structured outputs.

- Search-as-a-tool.

- Code execution (via Google Antigravity / Gemini API environments).

10. Production-ready availability

- Available in: Gemini App, Google AI Studio, Gemini API, Vertex AI, Live API.

- General availability (GA) with stable endpoints and documentation.

Prompts to Get Started

Use these prompts to power AI products you build on Appaca. Each works great with the models above.

Best for GPT Image 1

Content Marketing Strategy (Thought Leadership)

Create a persona-first content strategy that positions your brand as a thought leader and connects your USP to the challenges you solve.

Competitor Analysis (Differentiation Opportunities)

Analyze competitors and identify differentiation opportunities that strengthen your USP for your persona’s challenges.

Customer Loyalty Program (Rewards + Advocacy)

Create a loyalty program that rewards continued engagement and advocacy, reinforcing how your USP supports ongoing persona challenges.

Best for Gemini 2.5 Flash

Social Media Content Calendar Generator

Generate a complete month of social media content ideas organized by platform, content type, and posting schedule.

Differentiated Instruction Planner

Create tiered assignments and scaffolded activities that meet diverse learner needs while maintaining rigorous standards.

Cold Email Generator

Generate personalized cold emails that get responses using proven frameworks and personalization techniques.

What AI product will you build?

Describe your AI product idea and Appaca will help you create it - powered by GPT Image 1, Gemini 2.5 Flash, or any model you choose.

Free to start • No coding required • Launch to customers

See how Appaca works

Turn your AI ideas into AI products with the right AI model

Appaca is the complete platform for building AI agents, automations, and customer-facing interfaces. No coding required.

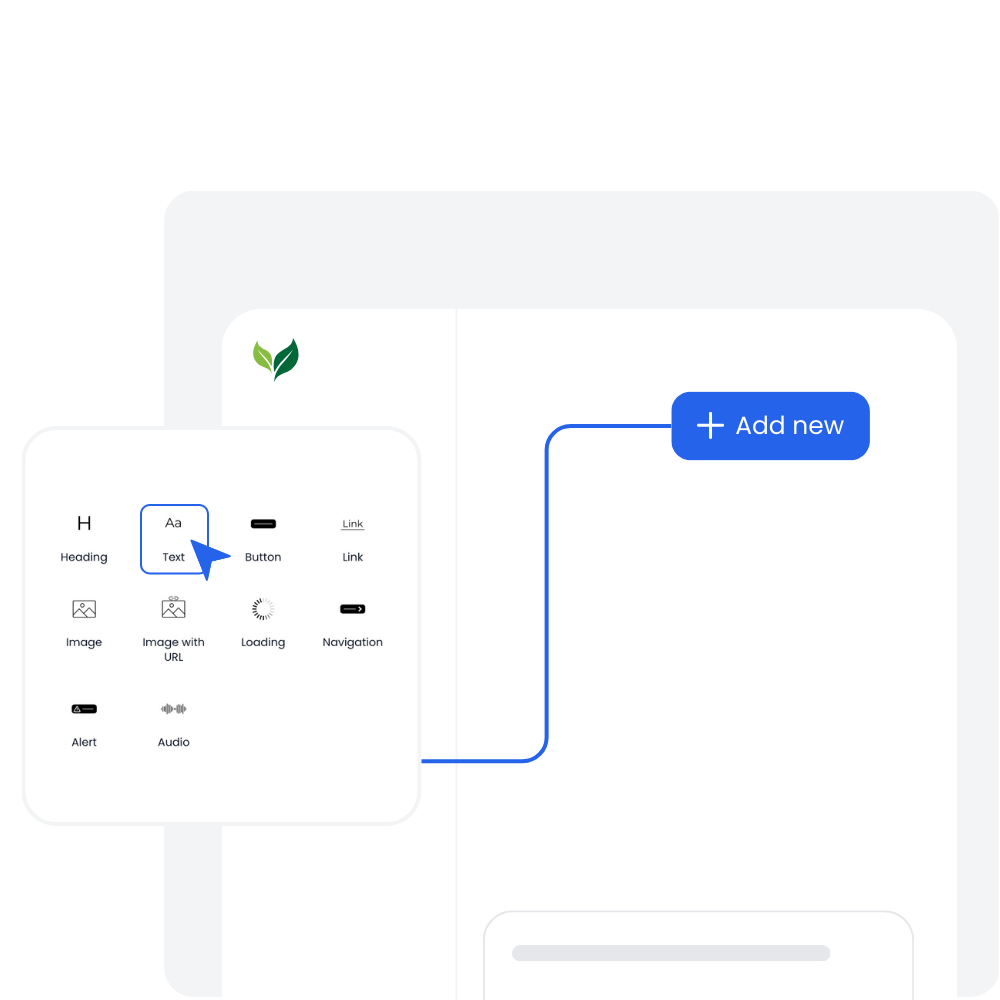

Customer-facing Interface

Create and style user interfaces for your AI agents and tools easily according to your brand.

Multimodel LLMs

Create, manage, and deploy custom AI models for text, image, and audio - trained on your own knowledge base.

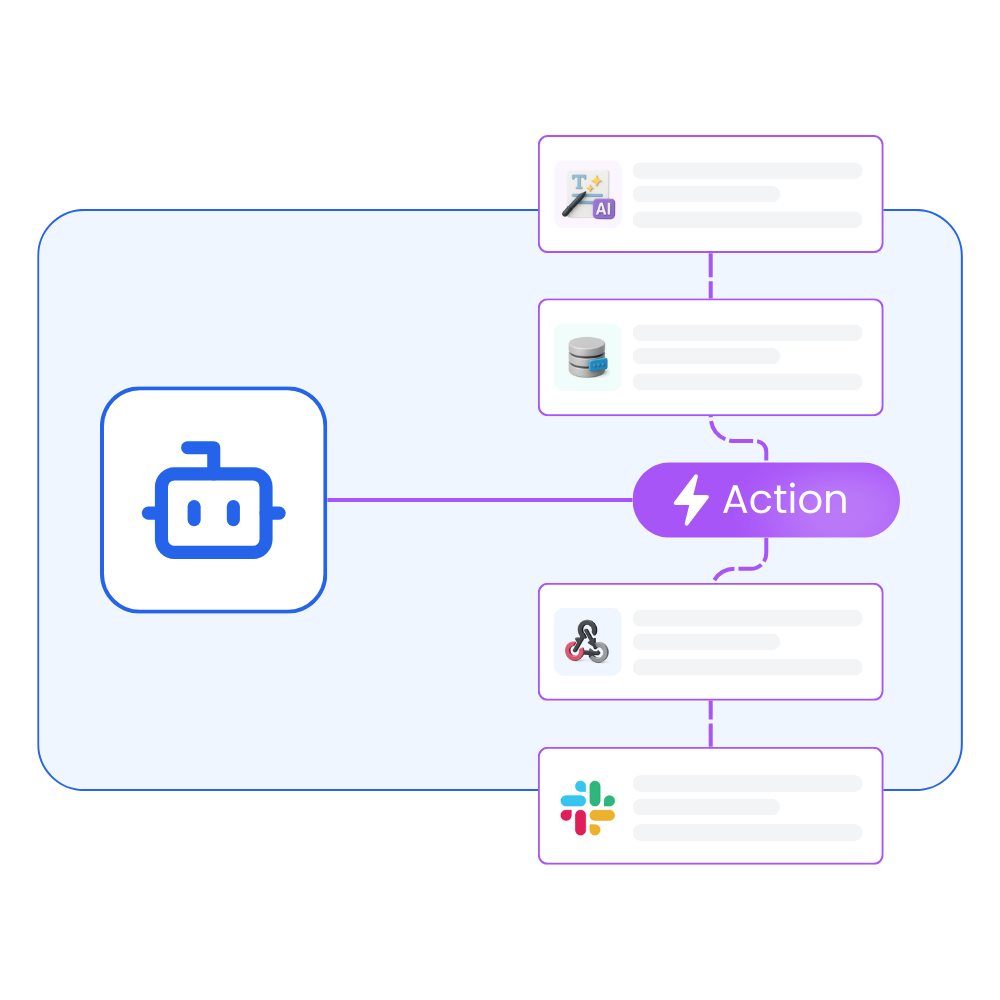

Agentic workflows and integrations

Create a workflow for your AI agents and tools to perform tasks and integrations with third-party services.


All you need to launch and sell your AI products with the right AI model

Appaca provides out-of-the-box solutions your AI apps need.

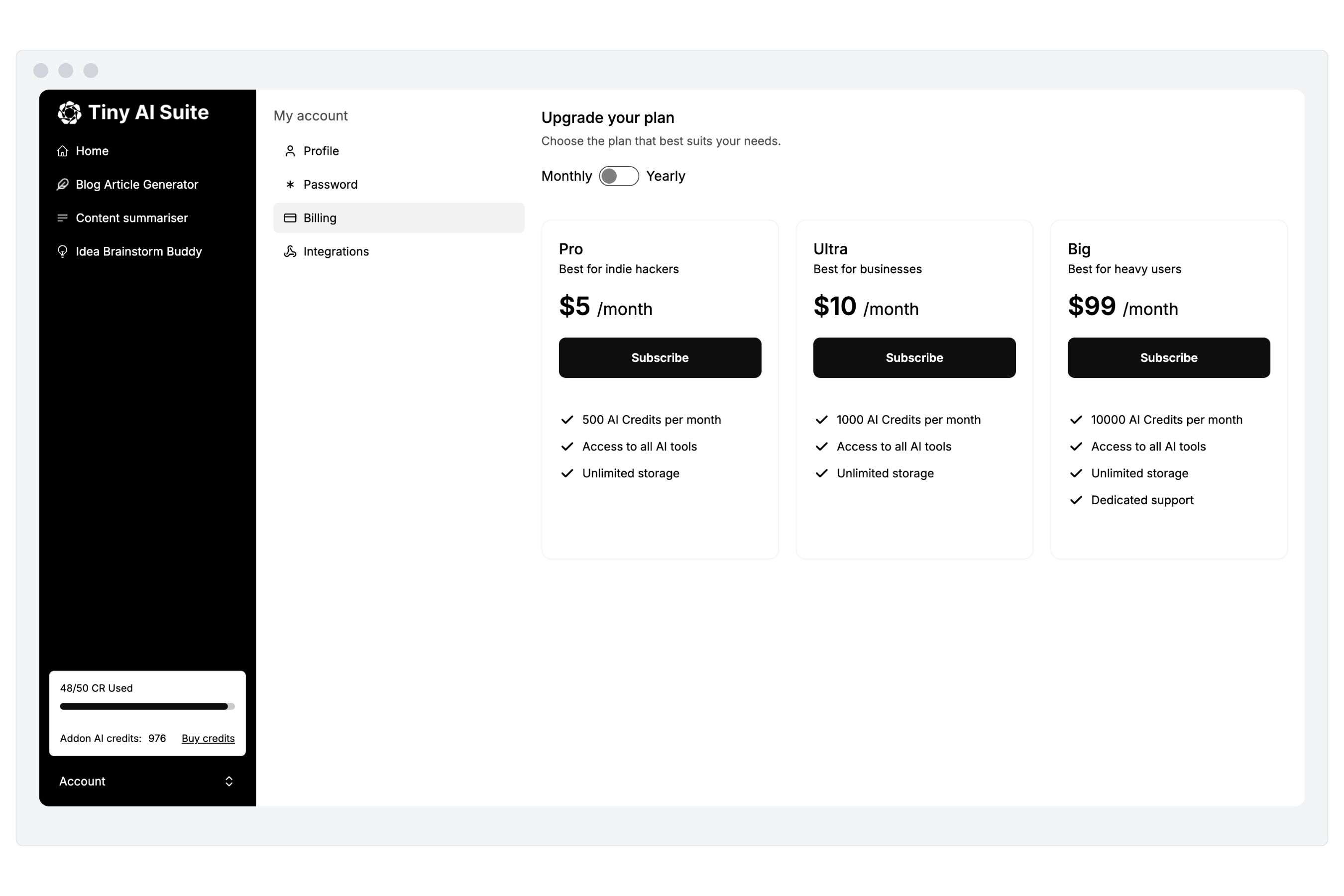

Monetize your AI

Sell your AI agents and tools as a complete product with subscription and AI credits billing. Generate revenue for your business.

“I've built with various AI tools and have found Appaca to be the most efficient and user-friendly solution.”

Cheyanne Carter

Founder & CEO, Edubuddy

Frequently Asked Questions

We are here to help!

Build AI products powered by GPT Image 1 or Gemini 2.5 Flash

Create AI tools and agents on Appaca. Choose your model, build your product, launch to customers.