Build AI products powered by o4-mini or Qwen-Max with no coding required

o4-mini vs Qwen-Max

Compare o4-mini and Qwen-Max. Build AI products powered by either model on Appaca.

Model Comparison

| Feature | o4-mini | Qwen-Max |
|---|---|---|
| Provider | OpenAI | Alibaba Cloud |
| Model type | Text | Text |
| Context window | 200,000 tokens | 32,768 tokens |
| Input cost (per 1M tokens) | $1.10 | $1.60 |
| Output cost (per 1M tokens) | $4.40 | $6.40 |
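The per-token prices in the table are easier to compare on a concrete workload. The Python sketch below is a hypothetical estimator: the `estimate_cost` helper and the 50M-input / 10M-output monthly workload are illustrative assumptions, and the prices are simply the list prices above. Real bills can differ because of pricing changes, caching discounts, and, for o4-mini, hidden reasoning tokens, which OpenAI bills as output tokens.

```python
# Hypothetical cost estimator using the list prices from the comparison table
# (USD per 1M tokens). Prices may change; check each provider's pricing page.
PRICES = {
    "o4-mini": {"input": 1.10, "output": 4.40},
    "Qwen-Max": {"input": 1.60, "output": 6.40},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost for the given token counts."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Assumed workload: 50M input tokens and 10M output tokens per month.
for model in PRICES:
    print(f"{model}: ${estimate_cost(model, 50_000_000, 10_000_000):,.2f}")
```

With these assumed volumes the sketch prints $99.00 for o4-mini and $144.00 for Qwen-Max. Because both models price output at four times input, the cost ratio between them stays the same whatever the input/output mix.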

Build AI products powered by any model

Appaca is a platform that enables you to create AI tools and agents. Choose the best model for your product and launch to customers.

Multi-Model Support

Power your AI product with o4-mini, Qwen-Max, or any supported model. Switch anytime.

No Infrastructure Needed

We handle all API integrations. You focus on building your AI product, not managing keys.

Launch & Monetize

Build once, sell to customers. Appaca handles payments, hosting, and scaling.

No credit card required • Build your first AI product in minutes

Strengths & Best Use Cases

o4-mini

OpenAI

1. Fast and efficient reasoning

- Provides strong reasoning capabilities with significantly lower latency and cost compared to larger o-series models.

- Ideal for lightweight reasoning tasks, logic steps, and quick multi-step thinking.

2. Optimized for coding tasks

- Performs exceptionally well in code generation, debugging, and explanation.

- Useful for IDE integrations, coding assistants, and developer tools with tight latency budgets.

3. Strong visual reasoning

- Accepts image inputs for tasks such as diagram interpretation, charts, UI analysis, and visual logic.

- Great for hybrid text-image reasoning flows.

4. Large 200K-token context window

- Capable of processing long documents, multi-file codebases, or extended analysis.

- Reduces the need for chunking or external retrieval pipelines.

5. High 100K-token output limit

- Supports lengthy reasoning sequences, full codebase explanations, or multi-section documents.

6. Broad API compatibility

- Available through the Chat Completions, Responses, Assistants, and Batch APIs.

- Supports streaming, function calling, structured outputs, and fine-tuning.

7. Cost-efficient for production

- Lower input and output pricing than larger o-series models makes it suitable for large-scale deployments, SaaS products, and recurring tasks.

8. Succeeded by GPT-5 mini

- GPT-5 mini offers improved speed, reasoning power, and pricing, but o4-mini remains a strong option for cost-sensitive workloads.
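The practical effect of the context window gap can be sketched as a chunk count. Assuming a document is split into equal pieces and each request also needs a fixed token budget for instructions and the reply, the hypothetical `chunks_needed` helper below estimates how many requests each model would need, using the context windows from the comparison table. It is a simplification: the two models use different tokenizers, so the same text yields different token counts, and o4-mini's reasoning tokens also occupy its context window.

```python
import math

# Context windows from the comparison table (tokens).
CONTEXT_WINDOWS = {"o4-mini": 200_000, "Qwen-Max": 32_768}


def chunks_needed(model: str, document_tokens: int, reserved_tokens: int) -> int:
    """Estimate how many chunks a document must be split into so that each
    chunk, plus `reserved_tokens` for instructions and the model's reply,
    fits in the model's context window (hypothetical helper)."""
    usable = CONTEXT_WINDOWS[model] - reserved_tokens
    if usable <= 0:
        raise ValueError("reserved_tokens leaves no room for document text")
    return math.ceil(document_tokens / usable)


# Assumed example: a 150,000-token codebase with 8,000 tokens reserved per request.
for model in CONTEXT_WINDOWS:
    print(model, chunks_needed(model, 150_000, 8_000))
```

Under these assumptions o4-mini can take the whole codebase in one request, while Qwen-Max needs seven chunks, plus whatever retrieval or merging logic is required to combine the partial answers.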

Qwen-Max

Alibaba Cloud

1. Strong general-purpose reasoning

- Well suited to coding, analysis, content creation, and multi-step tasks.

2. Stable commercial-grade model

- Predictable output quality and long-term stability.

3. Supports batch operations

- Batch inference costs 50% less than standard real-time calls.

4. Good for production agents

- Reliable instruction following and structured output.
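The batch discount is easiest to see on a concrete job. The sketch below prices a hypothetical Qwen-Max workload (20M input and 5M output tokens) at the table's list prices, with and without the 50% batch discount. It assumes the discount applies equally to input and output tokens; `qwen_max_cost` is an illustrative helper, not part of any Alibaba Cloud SDK. Batch jobs are processed asynchronously, so the savings suit workloads that do not need an immediate reply.

```python
# Qwen-Max list prices from the comparison table (USD per 1M tokens).
INPUT_PRICE = 1.60
OUTPUT_PRICE = 6.40
# Assumption: the 50% batch discount applies to both input and output tokens.
BATCH_DISCOUNT = 0.50


def qwen_max_cost(input_tokens: int, output_tokens: int, batch: bool = False) -> float:
    """Estimated USD cost of a Qwen-Max job, optionally run as a batch."""
    cost = (input_tokens * INPUT_PRICE + output_tokens * OUTPUT_PRICE) / 1_000_000
    return cost * (1 - BATCH_DISCOUNT) if batch else cost


# Assumed nightly job: 20M input tokens and 5M output tokens.
print(f"real-time: ${qwen_max_cost(20_000_000, 5_000_000):.2f}")
print(f"batch:     ${qwen_max_cost(20_000_000, 5_000_000, batch=True):.2f}")
```

For this assumed job the sketch prints $64.00 for real-time calls and $32.00 for batch.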

Prompts to Get Started

Use these prompts to power AI products you build on Appaca. Each works great with the models above.

Best for o4-mini

Thought Leadership Interviews (Experts + Angles)

Plan a thought leadership interview series featuring experts discussing persona challenges and how your USP relates to solutions.

Co-Marketing Partnerships (Complementary Brands)

Develop a co-marketing partnership strategy with brands serving the same persona, amplifying reach while reinforcing your USP and persona challenges.

Create Discovery Questions (Interrogatories + RFPs + RFAs)

Generate clear, organized discovery questions and requests tailored to a specific legal issue and case theory.

Best for Qwen-Max

Resume Bullet Point Optimizer

Transform weak resume responsibilities into strong, results-oriented bullet points.

Entity-Based Content Enhancement (Semantic SEO)

Generate named entities and natural insertion points to improve semantic depth and topical coverage.

Corporate Rate Negotiation: Email Script Generator

Draft a professional, data-backed email to negotiate corporate hotel rates and value-add perks.

What AI product will you build?

Describe your AI product idea and Appaca will help you create it, powered by o4-mini, Qwen-Max, or any model you choose.

Free to start • No coding required • Launch to customers

See how Appaca works

Turn your AI ideas into AI products with the right AI model

Appaca is the complete platform for building AI agents, automations, and customer-facing interfaces. No coding required.

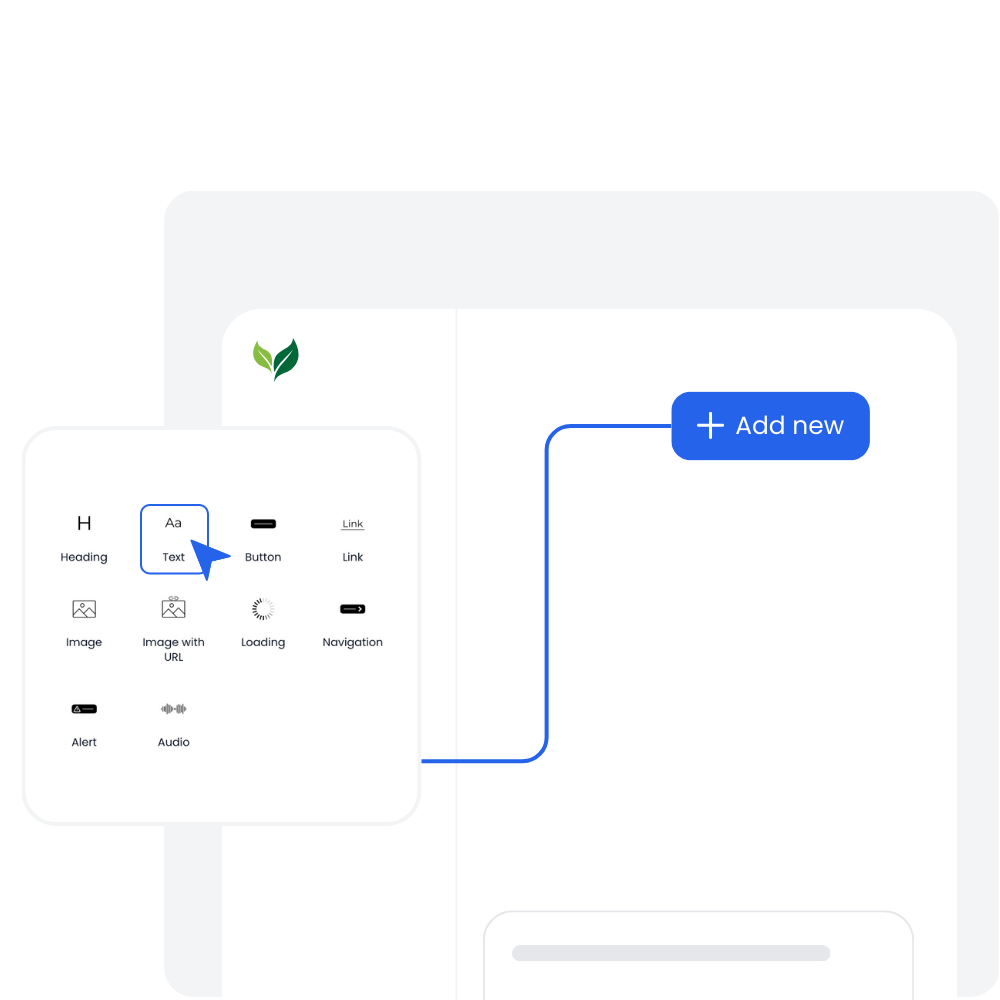

Customer-facing Interface

Create and style user interfaces for your AI agents and tools easily according to your brand.

Multimodal LLMs

Create, manage, and deploy custom AI models for text, image, and audio - trained on your own knowledge base.

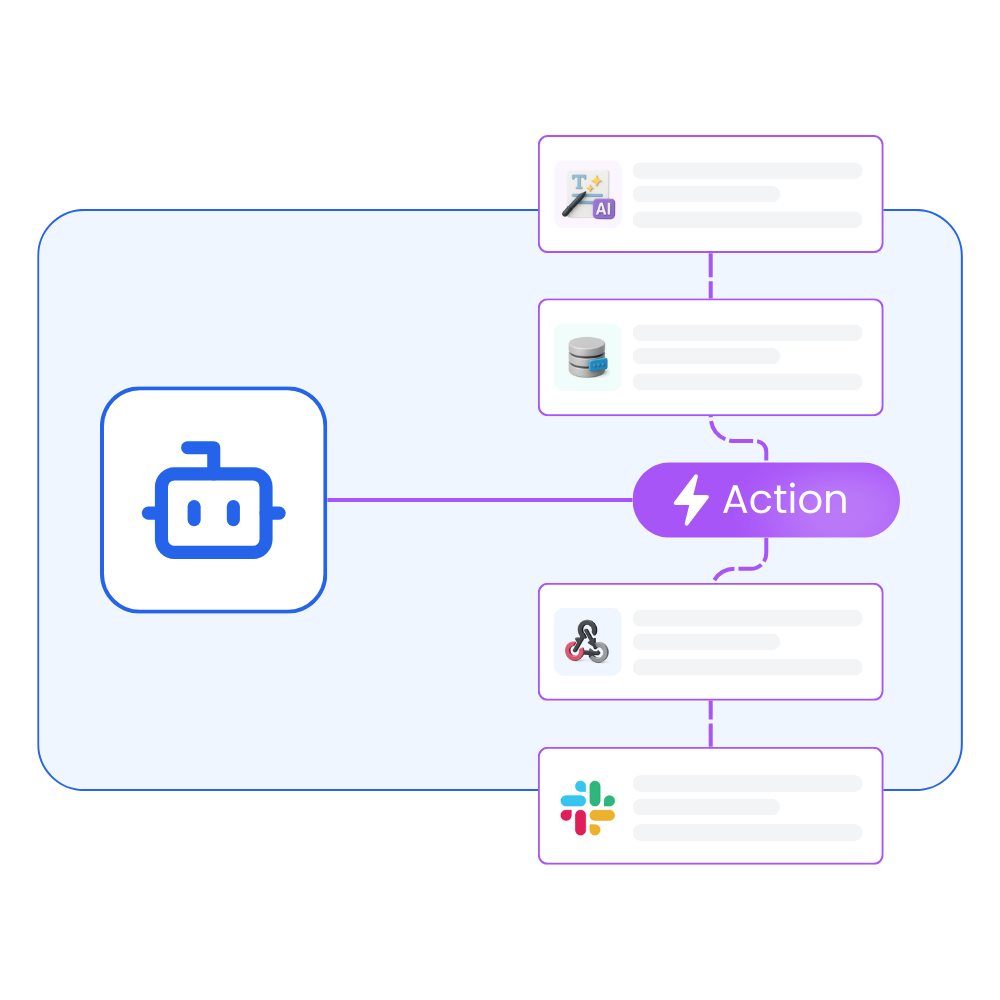

Agentic workflows and integrations

Create a workflow for your AI agents and tools to perform tasks and integrations with third-party services.


All you need to launch and sell your AI products with the right AI model

Appaca provides out-of-the-box solutions your AI apps need.

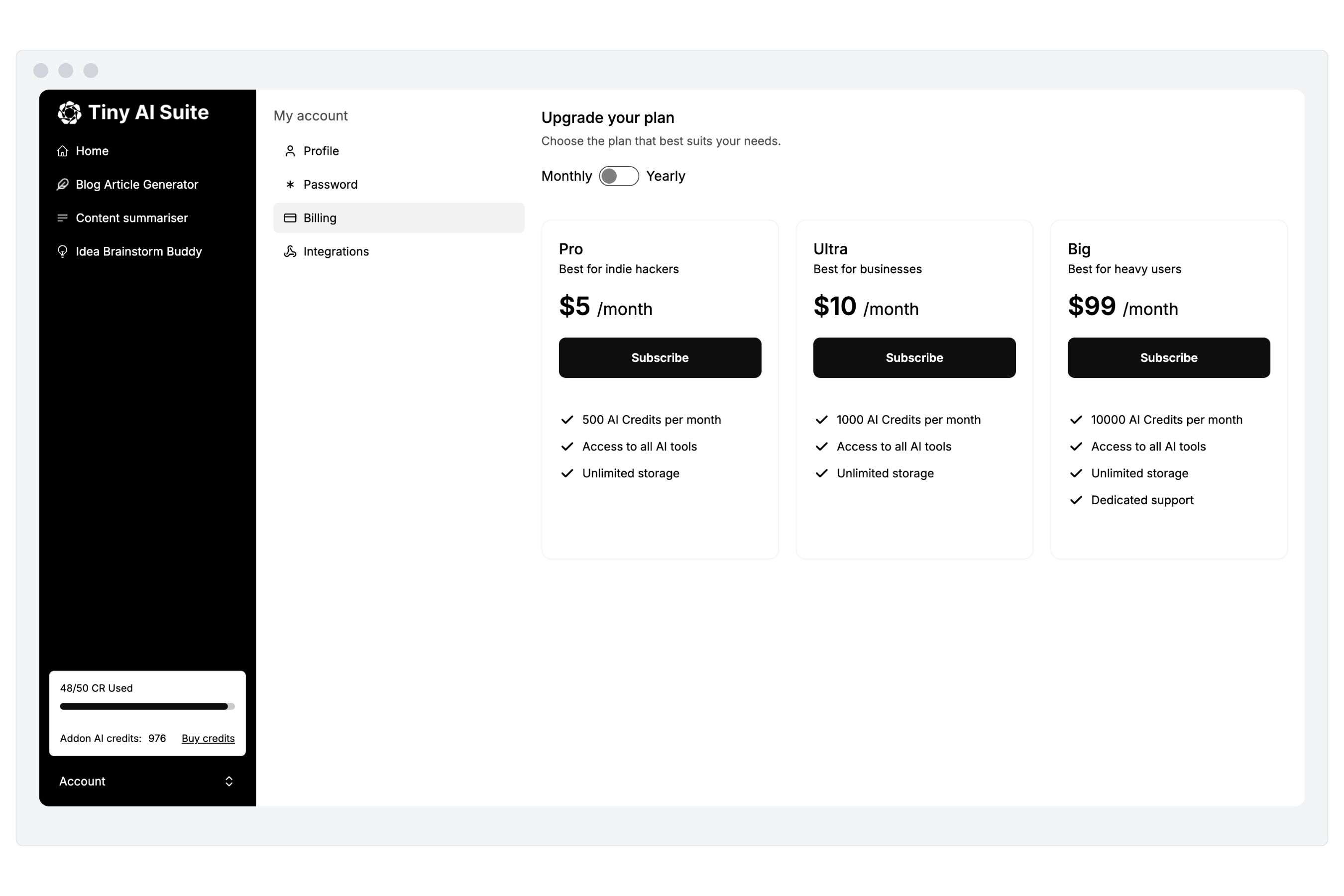

Monetize your AI

Sell your AI agents and tools as a complete product with subscription and AI credits billing. Generate revenue for your business.

“I've built with various AI tools and have found Appaca to be the most efficient and user-friendly solution.”

Cheyanne Carter

Founder & CEO, Edubuddy


Build AI products powered by o4-mini or Qwen-Max

Create AI tools and agents on Appaca. Choose your model, build your product, launch to customers.