Build AI products powered by GPT-4.1 or GPT-OSS 20B: no coding required

GPT-4.1 vs GPT-OSS 20B

Compare GPT-4.1 and GPT-OSS 20B. Build AI products powered by either model on Appaca.

Model Comparison

| Feature | GPT-4.1 | GPT-OSS 20B |
|---|---|---|
| Provider | OpenAI | OpenAI |
| Model Type | Text (accepts text + image input) | Text |
| Context Window | 1,047,576 tokens | 128,000 tokens |
| Input Cost | $2.00 / 1M tokens | $0.00 / 1M tokens |
| Output Cost | $8.00 / 1M tokens | $0.00 / 1M tokens |
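To see how the per-token rates in the comparison table translate into spend, here is a minimal Python sketch. It assumes the listed prices (USD per 1M tokens) and a hypothetical workload; `PRICES_PER_1M` and `estimate_cost` are illustrative names, not part of any Appaca or OpenAI API.

```python
# Per-1M-token prices in USD, copied from the comparison table (assumed current;
# update these values if pricing changes).
PRICES_PER_1M = {
    "GPT-4.1": {"input": 2.00, "output": 8.00},
    "GPT-OSS 20B": {"input": 0.00, "output": 0.00},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a workload at the listed per-1M rates."""
    rates = PRICES_PER_1M[model]
    return (input_tokens * rates["input"] + output_tokens * rates["output"]) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 500K input tokens and 100K output tokens.
    for name in PRICES_PER_1M:
        print(f"{name}: ${estimate_cost(name, 500_000, 100_000):.2f}")
```

For this hypothetical workload the sketch prints $1.80 for GPT-4.1 and $0.00 for GPT-OSS 20B; output tokens cost four times as much as input tokens on GPT-4.1, so output-heavy products shift the total quickly.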

Build AI products powered by any model

Appaca is a platform that enables you to create AI tools and agents. Choose the best model for your product and launch to customers.

Multi-Model Support

Power your AI product with GPT-4.1, GPT-OSS 20B, or any supported model. Switch anytime.

No Infrastructure Needed

We handle all API integrations. You focus on building your AI product, not managing keys.

Launch & Monetize

Build once, sell to customers. Appaca handles payments, hosting, and scaling.

No credit card required • Build your first AI product in minutes

Strengths & Best Use Cases

GPT-4.1

OpenAI

1. Smartest non-reasoning model

- OpenAI's most capable model among those without a reasoning step.

- Well suited to tasks that need both speed and accuracy but not deep multi-step reasoning.

2. Excellent instruction following

- Very strong at structured tasks, formatting, and precise execution.

- Ideal for productized workflows that need consistent, predictable outputs.

3. Reliable tool calling

- Works smoothly with Web Search, File Search, Image Generation, and Code Interpreter.

- Supports MCP (Model Context Protocol) and other tool-enabled API flows.

4. Large 1M-token context window

- Allows extremely long conversations, large documents, and multi-file use cases.

- Handles many context-heavy tasks without requiring chunking.

5. Low latency (no reasoning step)

- Typically responds faster than GPT-5 family models on tasks that don't need a reasoning step.

- More predictable timing for production use.

6. Multimodal input

- Accepts text + image.

- Output is text only.

7. Supports fine-tuning

- Can be fine-tuned for specialized tasks.

- Also supports distillation for smaller custom models.

GPT-OSS 20B

OpenAI

- Open-weight, Apache 2.0 licensed: you can use, modify, and deploy it freely, for commercial and academic work, under permissive terms.

- Model size of about 21B parameters with a Mixture-of-Experts (MoE) architecture: only about 3.6B parameters are active per token, which makes inference efficient.

- Long context window: up to 128K tokens (131,072, which some sources round to ~131K), enabling in-depth reasoning over long documents or multi-turn context.

- Adjustable reasoning effort: you can trade latency against quality by choosing a low, medium, or high reasoning-effort level.

- Efficient hardware requirements for its class: designed to run within about 16 GB of memory, such as a single 16 GB GPU, which suits local deployments and lower-latency applications.

- Strong at reasoning, tool use, and structured output. Because the model exposes its full chain of thought, you can also inspect that reasoning when debugging.

- Flexibility: since weights are available, you can self-host, fine-tune, or deploy offline, giving more control than closed API models.
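The context-window gap between the two models (1,047,576 vs. 128,000 tokens in the comparison table) is easy to check before sending a request. This minimal Python sketch assumes you already know the prompt's token count (for example, from a tokenizer) and that the window must hold both the prompt and the output you reserve; `CONTEXT_WINDOW_TOKENS` and `fits_in_context` are hypothetical names used only for illustration.

```python
# Context windows in tokens, taken from the comparison table. GPT-OSS 20B uses the
# conservative 128,000 figure rather than the ~131K some sources report.
CONTEXT_WINDOW_TOKENS = {
    "GPT-4.1": 1_047_576,
    "GPT-OSS 20B": 128_000,
}


def fits_in_context(model: str, prompt_tokens: int, reserved_output_tokens: int) -> bool:
    """True if the prompt plus the reserved output budget fits the model's window."""
    return prompt_tokens + reserved_output_tokens <= CONTEXT_WINDOW_TOKENS[model]


if __name__ == "__main__":
    # Hypothetical 300K-token document with a 4K-token answer budget.
    for name in CONTEXT_WINDOW_TOKENS:
        print(name, fits_in_context(name, 300_000, 4_000))
```

Under these assumptions the 300K-token document fits GPT-4.1 but not GPT-OSS 20B, which would need the input split into chunks.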

Prompts to Get Started

Use these prompts to power AI products you build on Appaca. Each works great with the models above.

Best for GPT-4.1

Email Subject Line Generator

Generate high-converting email subject lines that boost open rates using proven psychological triggers and A/B testing frameworks.

Email Campaign (Buyer Journey Nurture)

Create an email nurture campaign that guides your persona through the buyer journey while highlighting your USP and solving key challenges.

Interactive Quiz (Diagnose Challenges + Recommend Solutions)

Design a website quiz that helps your persona self-diagnose challenges and recommends next steps aligned to your USP.

Best for GPT-OSS 20B

Craft Catchy Sales Emails

Write high-converting sales emails with strong hooks, clear value, and a single focused CTA, optimized for your audience and offer.

Value-Added Service Inquiry (Pre-Arrival Email)

Write a polite pre-arrival email to request fee waivers or courtesy upgrades like premium Wi‑Fi and early check-in.

Social Media Campaign (USP + Challenge Angles)

Design a social media campaign that engages your persona with informative and entertaining content tied to your USP and their challenges.

What AI product will you build?

Describe your AI product idea, and Appaca will help you build it on GPT-4.1, GPT-OSS 20B, or any other model you choose.

Free to start • No coding required • Launch to customers

See how Appaca works

Turn your AI ideas into AI products with the right AI model

Appaca is the complete platform for building AI agents, automations, and customer-facing interfaces. No coding required.

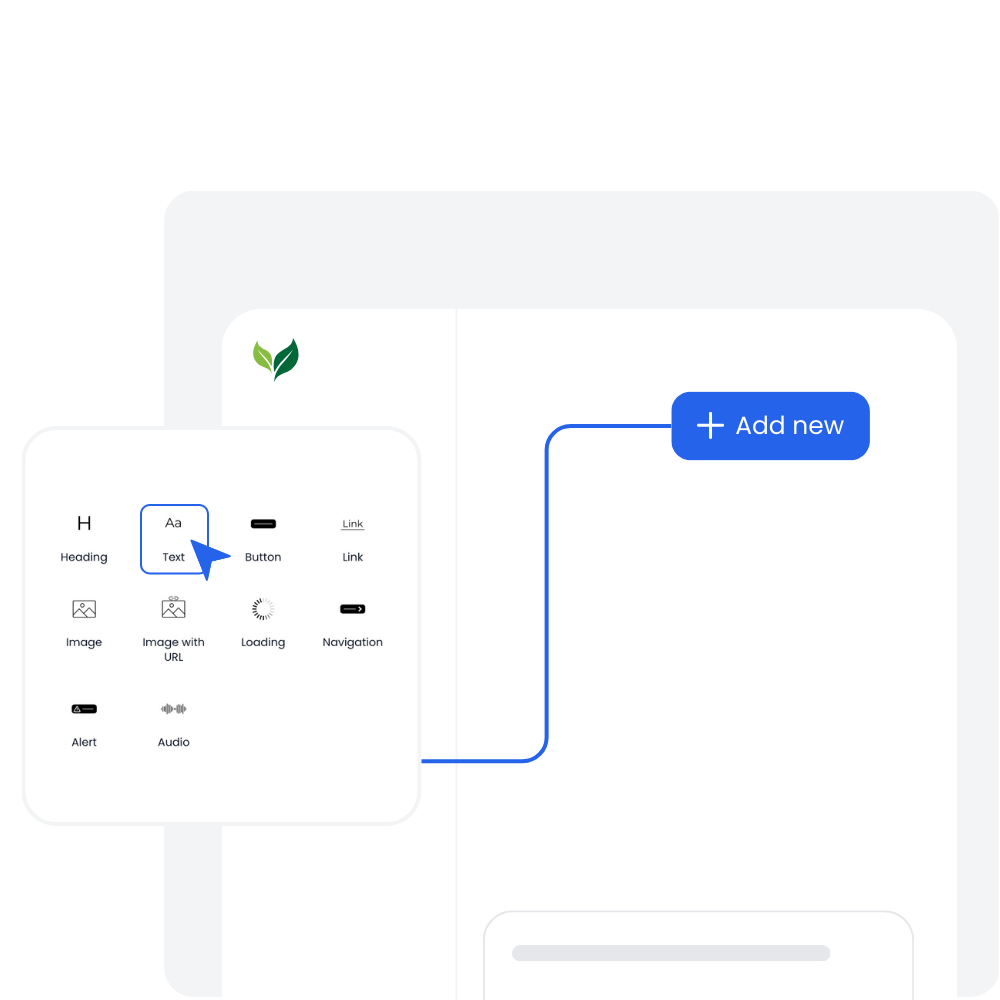

Customer-facing Interface

Easily create and style user interfaces for your AI agents and tools to match your brand.

Multimodal LLMs

Create, manage, and deploy custom AI models for text, image, and audio, trained on your own knowledge base.

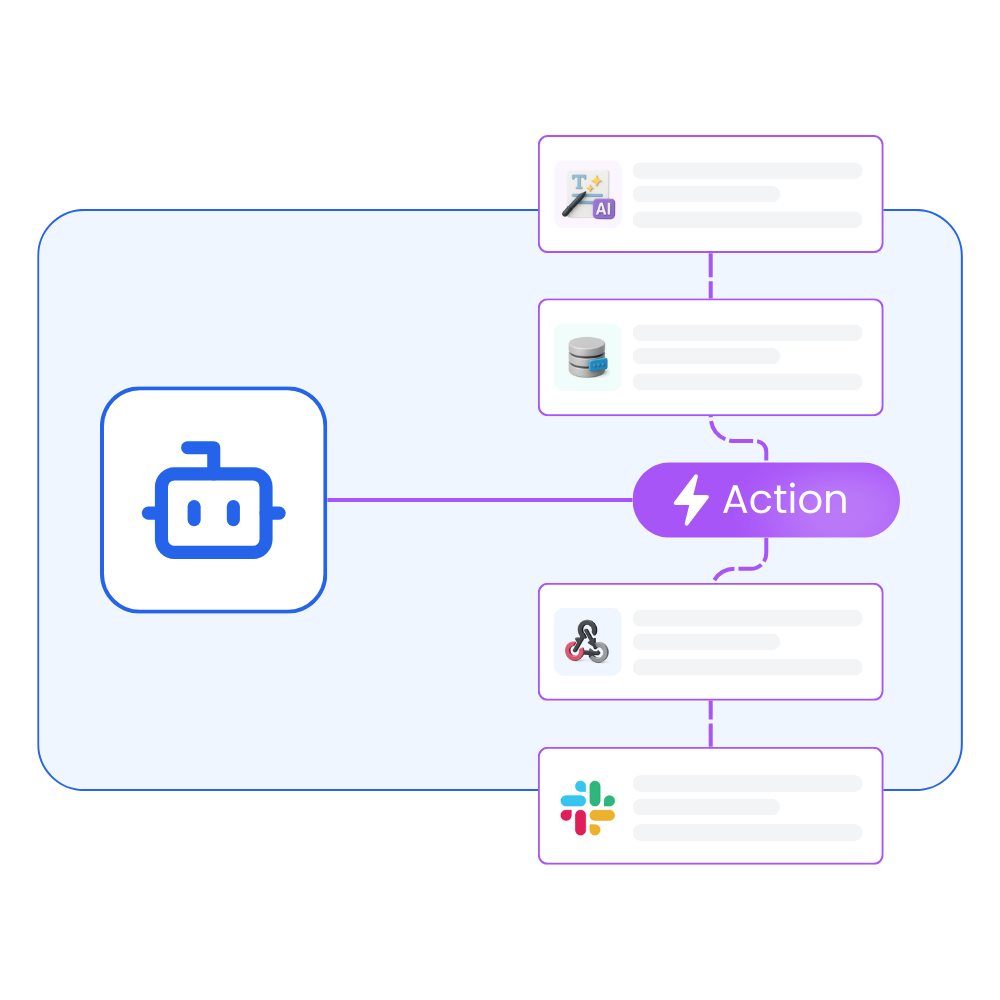

Agentic workflows and integrations

Create workflows that let your AI agents and tools perform tasks and integrate with third-party services.


All you need to launch and sell your AI products with the right AI model

Appaca provides out-of-the-box solutions your AI apps need.

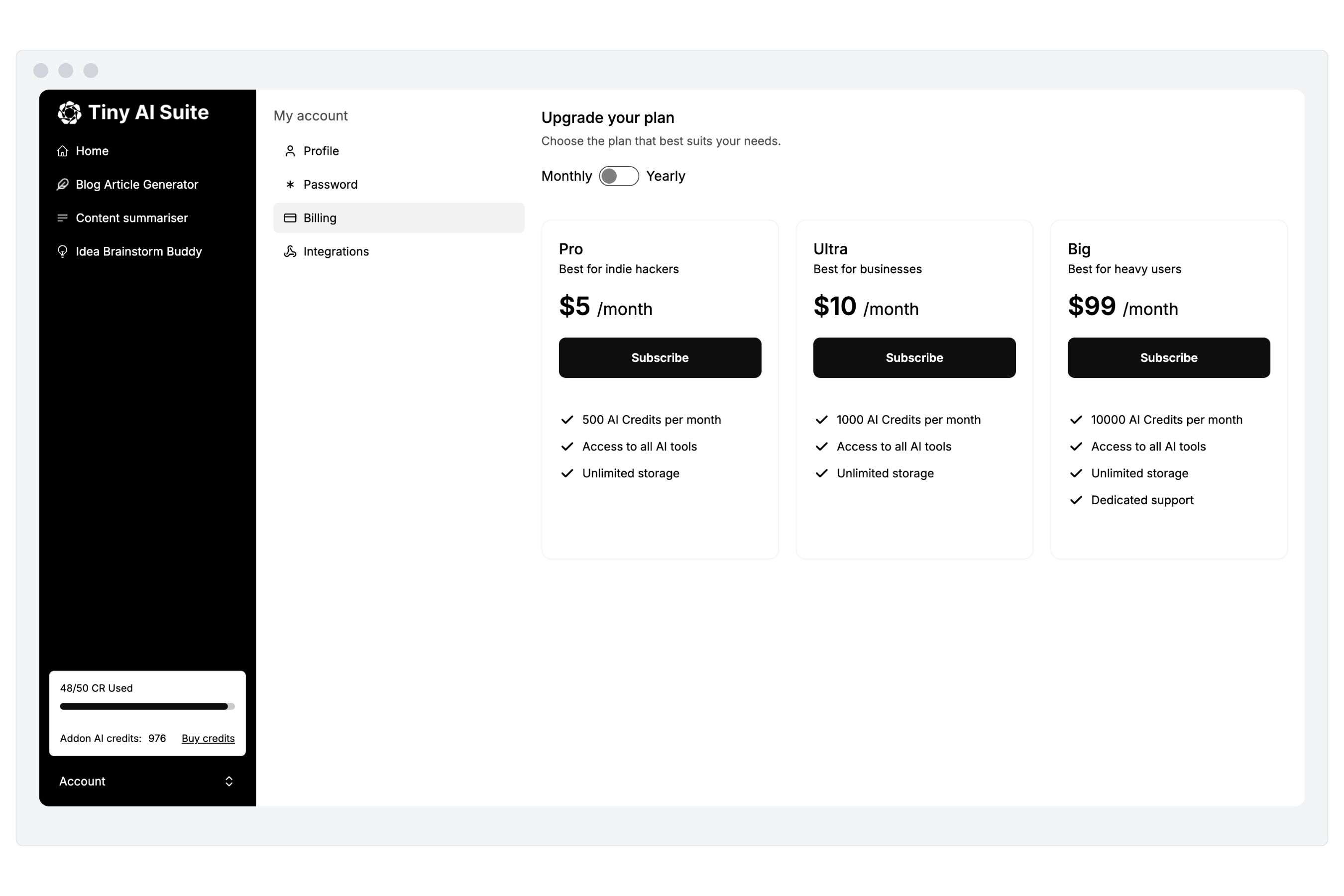

Monetize your AI

Sell your AI agents and tools as a complete product with subscription and AI-credit billing. Generate revenue for your business.

“I've built with various AI tools and have found Appaca to be the most efficient and user-friendly solution.”

Cheyanne Carter

Founder & CEO, Edubuddy

